Tutorial: Spatial 101

GEOG-364 - Spatial Analysis

Tutorial 11: Spatial 101

This tutorial is all about creating and manipulating spatial data

Tutorial 11A: Spatial basics

Why treat spatial differently

Geographical data needs special treatment. As well as standard data analysis, we need to tell R that our data has a spatial location on the Earth’s surface. R needs to understand what units our spatial location is in (e.g. metres, degrees..) and how we want the data to appear on plots and maps. R also needs to understand the different types of vector data (e.g. what we mean by a “polygon” or “point”).

To achieve this there are some specialist “spatial” types of data we need to use and several spatial data packages.

We’re going to split this tutorial by vector and raster (field) data

Map Projections

See here for a great overview: https://source.opennews.org/articles/choosing-right-map-projection/

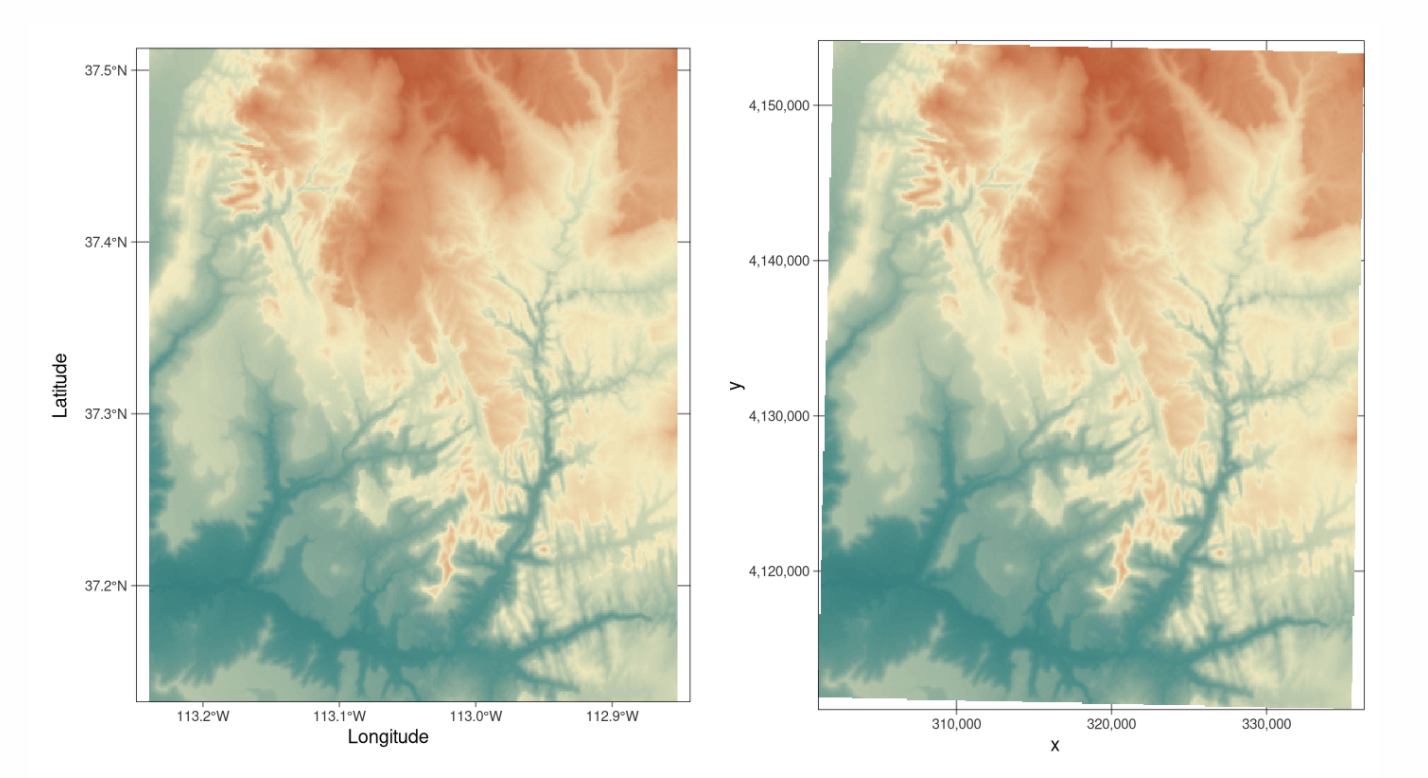

At its simplest, think of our map projections as the “units” of the x-y-z coordinates of geographic data. For example, here is the same map, but in two different projections.

On the left, the figure is in latitiude/longitude in units of degrees.

On the right the same map is in UTM. In the UTM system, the Earth is divided into 60 zones. Northing values are given by the metres north, or south (in the southern hemisphere) of the equator. Easting values are established as the number of metres from the central meridian of a zone.

Examples of geographic coordinate systems for raster data (WGS 84; left, in Lon/Lat degrees) and projected (NAD83 / UTM zone 12N; right, in metres), figure from https://geocompr.robinlovelace.net/spatial-class.html

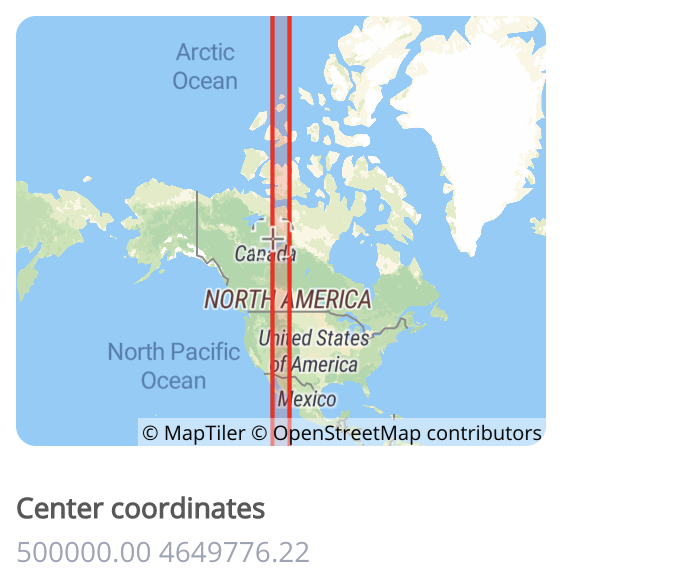

You can see the UTM zone here

Zone 12N: https://epsg.io/32612

Each map projection has a unique numeric code called an EPSG code. To find them, I tend to use these resources, but in this course I will try to provide the codes

- google & reading the dataset documentation

- https://epsg.io

- https://mangomap.com/robertyoung/maps/69585/what-utm-zone-am-i-in-

R is stupid. It has no idea what units or projection your coordinates are in

We need to tell R what units/projection/EPSG code your data is orginally in

THEN we need to convert it to the units/projection/EPSG we need for analysis

We will go into how to do this in each tutorial.

Tutorial 11B: Vector Data

As you know, vector data are “objects” you can “pick up and move around” on a map. So points, lines, polygons, volumes etc.

There are several families of commands available to manipulate vector spatial data.

- sp : The original spatial package.

- Find a detailed tutorial here: https://rspatial.org/raster/spatial/3-vectordata.html

- Find a detailed tutorial here: https://rspatial.org/raster/spatial/3-vectordata.html

- terra : Another new spatial package that we shall ignore.

- Detailed tutorial here: https://rspatial.org/terra/spatial/3-vectordata.html

- Detailed tutorial here: https://rspatial.org/terra/spatial/3-vectordata.html

- sf : A newer spatial package that fits into the tidyverse family.

- Detailed tutorial here: https://r-spatial.github.io/sf/articles/sf1.html

- Detailed tutorial here: https://r-spatial.github.io/sf/articles/sf1.html

- spatstat: A specific package for point pattern analysis

Spatial data packages are like competing mafia families. Some commands will only work with one spatial data type, so normally I will store my spatial data as each type. e.g. I will name my variables:

- mydata : My raw data (R doesn’t understand this is spatial)

- mydata.sp : The sp version of my data

- mydata.sf : The sf version of my data

a. Marked data

It is very important to understand whether your spatial data is “marked”.

Un-marked vector data means that we just know about the location of the spatial objects (points, polygons..). For example, the location of crimes, the location of city boundaries etc. We can assess if these objects are clustered together, spread out etc..

Marked vector data has some attribute e.g. we know some information about each point/polygon. For example, with our weather station data, we know marks such as the Elevation at each location, the distance to the ocean and the average last frost date:

b. Converting a data.frame in R to spatial sf

This is only one route, but it’s the one I use

Step 1: Check what columns your x and y coordinates are stored in.

Look at your data! View your data table and note what the column names your x and y data is stored in. Note, these don’t have to be fancy spatial names, they can be “elephanT” and “popcorn”.

head(frost)## # A tibble: 6 × 8

## Station State Type_Fake Avg_DOY_SpringFrost Latitude Longitude Elevation

## <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 Valley AL City 110. 34.6 -85.6 1020

## 2 Union AL City 82.3 32.0 -85.8 440

## 3 Saint AL Airport 99.8 34.2 -86.8 800

## 4 Fernandina FL City 46.9 30.7 -81.5 13

## 5 Lake FL City 60.6 30.2 -82.6 195

## 6 West GA City 85.6 32.9 -85.2 575

## # … with 1 more variable: Dist_to_Coast <dbl>Here, we can see that the data coordinates are in columns called “Longitude” and “Latitude”.

Step 2: Check what map projections your x and y coordinates are stored in.

Look at the data inside your x and y columns. Is it longitude/latitude in degrees? A large number (likely metres in UTM), something else? Look at the documentation of your data for clues. If you can find the map projection your data is in then you can google the CRS code.

If your data is in long/lat degrees, then the CRS code 4326 should work. (I got that from this pdf: https://www.nceas.ucsb.edu/sites/default/files/2020-04/OverviewCoordinateReferenceSystems.pdf)

Step 3 Convert to sf using the st_as_sf command

st_as_sf (tablename, coords=c(XColumnName,YColumnName),crs=MapProjection)

For example for our frost data, here is how I turned it into a sf spatial data format. From step 2, I know this is in long/lat coordinates and the crs is 4326.

frost.sf <- st_as_sf(frost,coords=c("Longitude","Latitude"),crs=4326)Now I can check I did it correctly. Here is my attempt at plotting the long/lat data directly. It doesn’t look much like the USA!

plot(frost$Longitude,frost$Latitude)But here you can see the shapes of the USA. R has also tried to plot the marks. All the spatial commands will now work.

plot(frost.sf)Step 4. Check your map projection

There are a LOAD of ways to check the map projection of your data. Perhaps the easiest are the st_crs and crs commands:

st_crs(frost.sf)## Coordinate Reference System:

## User input: EPSG:4326

## wkt:

## GEOGCRS["WGS 84",

## DATUM["World Geodetic System 1984",

## ELLIPSOID["WGS 84",6378137,298.257223563,

## LENGTHUNIT["metre",1]]],

## PRIMEM["Greenwich",0,

## ANGLEUNIT["degree",0.0174532925199433]],

## CS[ellipsoidal,2],

## AXIS["geodetic latitude (Lat)",north,

## ORDER[1],

## ANGLEUNIT["degree",0.0174532925199433]],

## AXIS["geodetic longitude (Lon)",east,

## ORDER[2],

## ANGLEUNIT["degree",0.0174532925199433]],

## USAGE[

## SCOPE["Horizontal component of 3D system."],

## AREA["World."],

## BBOX[-90,-180,90,180]],

## ID["EPSG",4326]]# written like this to force the projection command specifically from the raster package

raster::projection(frost.sf)## [1] "+proj=longlat +datum=WGS84 +no_defs"Here we can see that we assigned our data to be in Lat/Long, with a datum (the shape of the world) of WGS 84 and EPSG/CRS code 4326.

You can use this command on any sf data to check.

Step 5. Assign a new map projection

When we do our plots and analyses, we will often need many layers of data - for example, our points, state borders, city locations, a raster map of temperatures..

Chances are each of these layers is stored using different map projections and units. This means that they won’t plot correctly!

So it’s good practice to make sure all your layers have the same map projection. We do this using the st_transform command:

yoursfvariable <- st_transform (yoursfvariable, crs=NEWNUMBER)

E.g apply the st_transform command to your sf data with the new crs, then assign the output to a variable of the same name to overwrite, or a new name to create a new version with our new projection.

For example, to transform our data to the UTM (the map projection in meters):

- Go here: https://mangomap.com/robertyoung/maps/69585/what-utm-zone-am-i-in- and choose the zone you want.

- I chose a generic US East Coast zone: UTM Zone: 18N.

- You can also choose a “datum” (the shape of the earth’s spheroid).

- For us, let’s always choose WGS 84

- Search for the CRS code of that zone here: https://epsg.io . E.g search

UTM Zone XX WGS 84- For example for me: https://epsg.io/?q=UTM+zone+18N+WGS+84

- This brought up code 32618: https://epsg.io/32618

- Apply the command. Here I made three versions, one with lat/long, one with UTM and one with a polar stereographic projection. I often add the projection to the end of the variable name to keep things neat.

frost.sf.lonlat <- st_transform(frost.sf, 4326)

frost.sf.utm <- st_transform(frost.sf, 32618)

frost.sf.polar <- st_transform(frost.sf, 3995)Let’s see what we did

raster::projection(frost.sf.lonlat)## [1] "+proj=longlat +datum=WGS84 +no_defs"# YOU CAN SEE THE MAP UNITS ARE IN METRES!

crs(frost.sf.utm)## Coordinate Reference System:

## Deprecated Proj.4 representation:

## +proj=utm +zone=18 +datum=WGS84 +units=m +no_defs

## WKT2 2019 representation:

## PROJCRS["WGS 84 / UTM zone 18N",

## BASEGEOGCRS["WGS 84",

## DATUM["World Geodetic System 1984",

## ELLIPSOID["WGS 84",6378137,298.257223563,

## LENGTHUNIT["metre",1]]],

## PRIMEM["Greenwich",0,

## ANGLEUNIT["degree",0.0174532925199433]],

## ID["EPSG",4326]],

## CONVERSION["UTM zone 18N",

## METHOD["Transverse Mercator",

## ID["EPSG",9807]],

## PARAMETER["Latitude of natural origin",0,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8801]],

## PARAMETER["Longitude of natural origin",-75,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8802]],

## PARAMETER["Scale factor at natural origin",0.9996,

## SCALEUNIT["unity",1],

## ID["EPSG",8805]],

## PARAMETER["False easting",500000,

## LENGTHUNIT["metre",1],

## ID["EPSG",8806]],

## PARAMETER["False northing",0,

## LENGTHUNIT["metre",1],

## ID["EPSG",8807]]],

## CS[Cartesian,2],

## AXIS["(E)",east,

## ORDER[1],

## LENGTHUNIT["metre",1]],

## AXIS["(N)",north,

## ORDER[2],

## LENGTHUNIT["metre",1]],

## USAGE[

## SCOPE["Engineering survey, topographic mapping."],

## AREA["Between 78°W and 72°W, northern hemisphere between equator and 84°N, onshore and offshore. Bahamas. Canada - Nunavut; Ontario; Quebec. Colombia. Cuba. Ecuador. Greenland. Haiti. Jamica. Panama. Turks and Caicos Islands. United States (USA). Venezuela."],

## BBOX[0,-78,84,-72]],

## ID["EPSG",32618]]crs(frost.sf.polar)## Coordinate Reference System:

## Deprecated Proj.4 representation:

## +proj=stere +lat_0=90 +lat_ts=71 +lon_0=0 +x_0=0 +y_0=0 +datum=WGS84

## +units=m +no_defs

## WKT2 2019 representation:

## PROJCRS["WGS 84 / Arctic Polar Stereographic",

## BASEGEOGCRS["WGS 84",

## DATUM["World Geodetic System 1984",

## ELLIPSOID["WGS 84",6378137,298.257223563,

## LENGTHUNIT["metre",1]]],

## PRIMEM["Greenwich",0,

## ANGLEUNIT["degree",0.0174532925199433]],

## ID["EPSG",4326]],

## CONVERSION["Arctic Polar Stereographic",

## METHOD["Polar Stereographic (variant B)",

## ID["EPSG",9829]],

## PARAMETER["Latitude of standard parallel",71,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8832]],

## PARAMETER["Longitude of origin",0,

## ANGLEUNIT["degree",0.0174532925199433],

## ID["EPSG",8833]],

## PARAMETER["False easting",0,

## LENGTHUNIT["metre",1],

## ID["EPSG",8806]],

## PARAMETER["False northing",0,

## LENGTHUNIT["metre",1],

## ID["EPSG",8807]]],

## CS[Cartesian,2],

## AXIS["easting (X)",south,

## MERIDIAN[90,

## ANGLEUNIT["degree",0.0174532925199433]],

## ORDER[1],

## LENGTHUNIT["metre",1]],

## AXIS["northing (Y)",south,

## MERIDIAN[180,

## ANGLEUNIT["degree",0.0174532925199433]],

## ORDER[2],

## LENGTHUNIT["metre",1]],

## USAGE[

## SCOPE["Polar research."],

## AREA["Northern hemisphere - north of 60°N onshore and offshore, including Arctic."],

## BBOX[60,-180,90,180]],

## ID["EPSG",3995]]Step 6. Make a sp version

Now we have the data in the projection we want, let’s store an sp version just in case we need it.

To do this we use the “as” command. change the sf format to “Spatial” (sp) format.

# NOTE, I have changed the variable name from sf to sp!

frost.sp.lonlat <- as(frost.sf.lonlat, "Spatial")

frost.sp.utm <- as(frost.sf.utm, "Spatial")

frost.sp.polar <- as(frost.sf.polar, "Spatial")For some commands, you might get an error using the sf version, so now you also have a convenient sp version

Step 7. ALL COMMANDS

Here are all the commands in one place for future labs. See how i’m using code comments to keep things neat.

## [1] "Station" "State" "Type_Fake"

## [4] "Avg_DOY_SpringFrost" "Latitude" "Longitude"

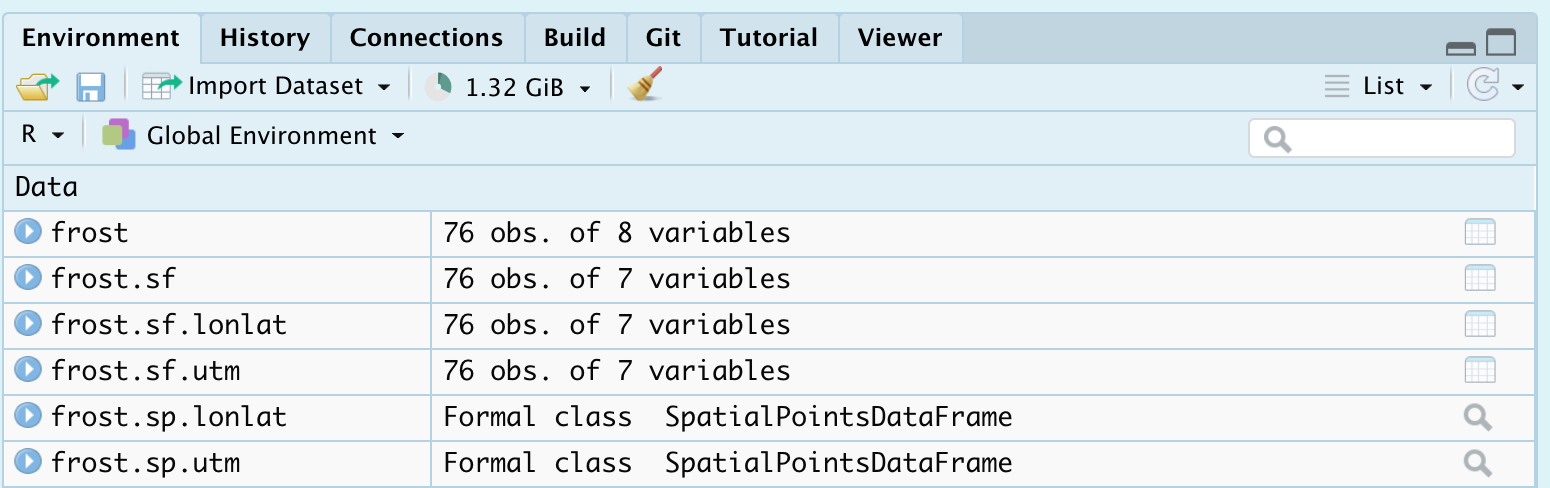

## [7] "Elevation" "Dist_to_Coast"The advantage of naming them this way is that it’s now really easy to find stuff in your environment tab. For example I can immediately see that if I want the latlon sf version of the frost dataset, I would go to frost.sf.lonlat

c. Using RNaturalEarth built-in vector datasets

Let’s now also include some vector-line data on top of our points, but adding in some regional administrative boundaries. In later labs, we will learn how to read in vector data from a file, but this time we are going to use data that is already built into R.

This is part of the rnaturalearth package, which links automatically with the “Natural Earth” dataset, found here: https://www.naturalearthdata.com/features/

First, if you haven’t already, download the high-resolution data in rnaturalearth by running this command in the console:

remotes::install_github("ropenscilabs/rnaturalearthhires")For administrative border data, we can use the ne_countries or the ne_statescommands.

For example, ne_countries will load the entire world borders and assign it to a variable called worldborder.

# You can choose if you want the output to be sf or sp data

worldborder.sf <- ne_countries(scale = "medium", returnclass = "sf")

# st_geometry just means plot the borders

plot(st_geometry(worldborder.sf))# You can choose if you want the output to be sf or sp data

UK.country.sf <- ne_countries(country="united kingdom",returnclass = "sf",scale = "medium")

plot(st_geometry(UK.country.sf))If you want states/regions for your country, you can use the command ne_states().

# You can choose if you want the output to be sf or sp data

UK.regions.sf <- ne_states(country="united kingdom",returnclass = "sf")

plot(st_geometry(UK.regions.sf))Let’s improve our frost plot

US.states.sf <- ne_states(country="united states of america",returnclass = "sf")

# Transform to UTM

US.states.sf.utm <- st_transform(US.states.sf,crs=32618)

plot(st_geometry(frost.sf.utm),col="red",pch=16)

plot(st_geometry(US.states.sf.utm),add=TRUE)d. Manipulating sf data

Manipulating your spatial data is actually exactly the same as manipulating your dataframes. You can access columns, filter, select etc in exactly the same way. You might simply see some additional messages saying that the data comes from a “spatial” data frame.

For example, to print the first 10 rows:

head(frost.sf.lonlat)## Simple feature collection with 6 features and 6 fields

## Geometry type: POINT

## Dimension: XY

## Bounding box: xmin: -86.82 ymin: 30.18 xmax: -81.47 ymax: 34.57

## Geodetic CRS: WGS 84

## # A tibble: 6 × 7

## Station State Type_Fake Avg_DOY_SpringFrost Elevation Dist_to_Coast

## <chr> <chr> <chr> <dbl> <dbl> <dbl>

## 1 Valley AL City 110. 1020 295.

## 2 Union AL City 82.3 440 122.

## 3 Saint AL Airport 99.8 800 252.

## 4 Fernandina FL City 46.9 13 1.15

## 5 Lake FL City 60.6 195 63.0

## 6 West GA City 85.6 575 187.

## # … with 1 more variable: geometry <POINT [°]>To filter for just Florida and Alabama stations below 500feet and save to a new variable

frost.FL.sf.lonlat <- dplyr::filter(frost.sf.lonlat, State %in% c("FL","AL"))

frost.FL.sf.lonlat <- dplyr::filter(frost.sf.lonlat, Elevation < 500)To make a table of stations in each state in our new dataset

table(frost.FL.sf.lonlat$State,frost.FL.sf.lonlat$Type_Fake)##

## Agricultural_Research_Station Airport City

## AL 1 0 1

## FL 1 0 5

## GA 3 3 5

## NC 0 1 7

## SC 4 2 3

## VA 0 3 0Or check the maximum elevation in our new dataset

max(frost.FL.sf.lonlat$Elevation)## [1] 490e. Plotting vector data

See tutorial 12.

Tutorial 11C: Raster Data

Raster basics

To come!

Website created and maintained by Helen Greatrex. Website template by Noli Brazil