Project: Downloading data

GEOG-364 - Spatial Analysis

Set up and libraries

My example tutorial is set in Iowa. First, I will download two data-sets, a point dataset on farmers markets, and the Iowan SVI data. I then merge in other data-sets.

library(sp)

library(sf)

library(elevatr)

library(USAboundaries)

library(raster)

library(tidycensus)

library(rnaturalearth)

library(tmap)

library(exactextractr)

library(units)

library(readxl)

library(FedData)CSV and XLSX data

All xlsx and csv files

Make sure that library(readxl) , library(sp) and library(sf) are in your library code chunk at the top.

Some point data might be stored in Shapefile format. If so, load directly as an sf variable using the next tutorial.

Other point data is stored as a csv, a text file or as an excel file.

To read this data in, see Tutorial 6a and Tutorial 6b.

This link contains the csv used in this tutorial of Iowan farmers markets. I downloaded this, then read it into R, made it spatial, changed the map projection and made a quick plot.

IA_farmersmarket <- readxl::read_excel("Farmers_Markets.xlsx")

names(IA_farmersmarket)## [1] "X" "Y" "FID" "City" "County"

## [6] "Latitude" "Location" "Longitude" "Market_Name" "Open_Dates"

## [11] "Open_Hours" "State" "Weekday"# Note, X and Y because that's what the columns are called.

# I know that it was in lat/long originally, hence the crs

IA_farmersmarket.sf <- st_as_sf(IA_farmersmarket,coords=c("X","Y"),crs=4326)

# And change to a map projection of your choice. I am choosing UTM Iowa

IA_farmersmarket.sf <- st_transform(IA_farmersmarket.sf,3744)

# make a quick plot

tmap_mode("view")## tmap mode set to interactive viewingqtm(st_geometry(IA_farmersmarket.sf))Trouble shooting

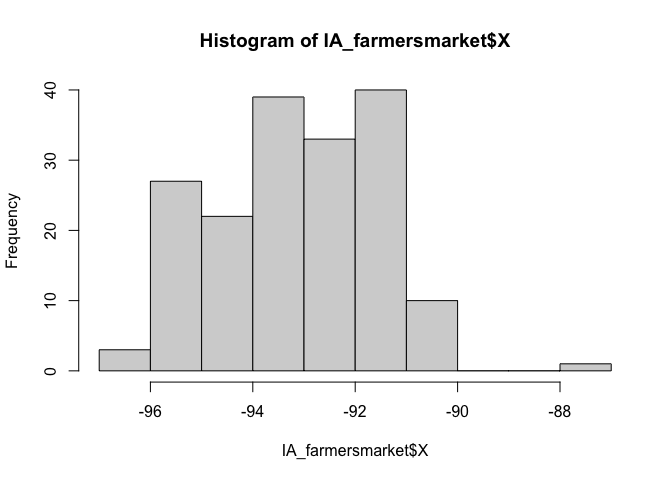

You can see in the plot above that there appears to be one point that is not in Iowa. So now I will look at the long/lat columns.

summary(IA_farmersmarket$X)## Min. 1st Qu. Median Mean 3rd Qu. Max.

## -96.56 -94.37 -93.10 -93.12 -91.78 -87.12hist(IA_farmersmarket$X)

I feel that maybe that point at Long ~ -88 is wrong. Let’s take a look.

IA_farmersmarket[IA_farmersmarket$X > -90,]## # A tibble: 1 × 13

## X Y FID City County Latitude Location Longitude Market_Name

## <dbl> <dbl> <dbl> <chr> <chr> <dbl> <chr> <dbl> <chr>

## 1 -87.1 37.3 9 Central City LINN 37.3 South 5t… -87.1 Atlantic F…

## # … with 4 more variables: Open_Dates <chr>, Open_Hours <chr>, State <chr>,

## # Weekday <chr>I’m guessing there’s a typo - so I could just google this and fix it! but for now, let’s remove and try again.

# choose all the other ones, note the <= instead of >

IA_farmersmarket <- IA_farmersmarket[IA_farmersmarket$X <= -90,]

# Note, X and Y because that's what the columns are called.

# I know that it was in lat/long originally, hence the crs

IA_farmersmarket.sf <- st_as_sf(IA_farmersmarket,coords=c("X","Y"),crs=4326)

# And change to a map projection of your choice. I am choosing UTM Iowa

IA_farmersmarket.sf <- st_transform(IA_farmersmarket.sf,3744)

# make a quick plot

tmap_mode("view")## tmap mode set to interactive viewingqtm(st_geometry(IA_farmersmarket.sf))Shapefiles

Downloading a Shapefile (point or polygon)

There’s also another example in Tutorial 6C

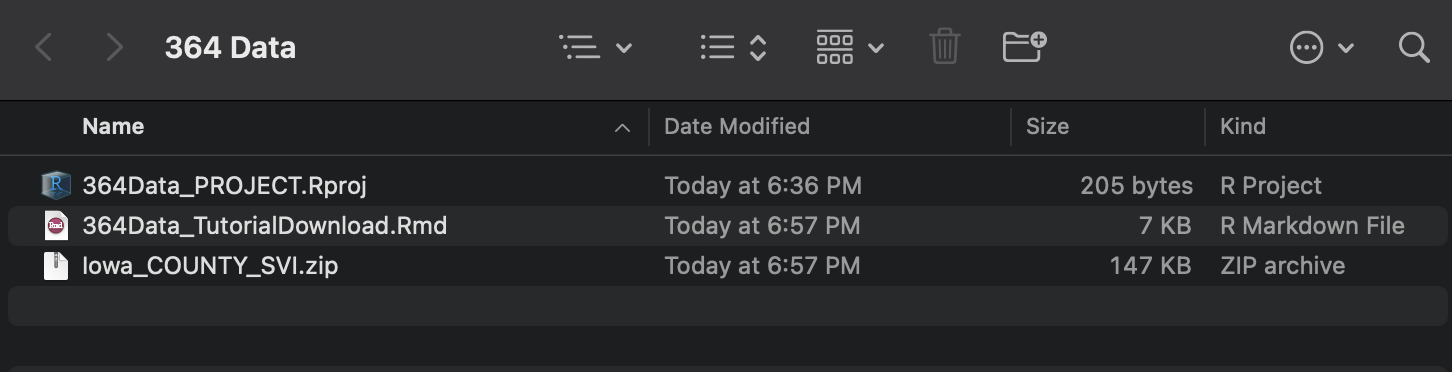

I’m going to use an SVI shape-file that we used in Lab 6. I have stored this on github, so I can download it directly. Note, a shape-file is a group of files TOGETHER that are often stored in a single folder.

This link contains the Shapefile used in this tutorial of Iowan SVI data. I downloaded it and put it in my project folder. I downloaded a zip file

You can unzip the file this using this command, or double click on the zip file on your computer.

unzip("Iowa_COUNTY_SVI.zip")This should unzip all the files into your project folder. Note that the file-names might be different to the zipfile name!

Sometimes this will unzip these into a sub-folder. You can deal with this, but it is easier to just move ALL the sub-files into the main project folder.

Opening a Shapefile

Make sure that library(sp) and library(sf) are in your library code chunk at the top.

The command to read in any shapefile is st_read() from the sf library. Set it to read in the .shp extension. You should see something like this, but remember that you can suppress output using message=FALSE,warning=FALSE and results=FALSE at the top of the code chunk.

# Note the .shp extension

# and that the filename is different to the zip file name!

SVI_county.sf <- st_read("SVI2018_IOWA_county.shp")## Reading layer `SVI2018_IOWA_county' from data source

## `/Users/hlg5155/Dropbox/My Mac (E2-GEO-WKML011)/Documents/GitHub/Teaching/Geog364-2021/SVI2018_IOWA_county.shp'

## using driver `ESRI Shapefile'

## Simple feature collection with 99 features and 125 fields

## Geometry type: POLYGON

## Dimension: XY

## Bounding box: xmin: -96.6397 ymin: 40.3755 xmax: -90.14006 ymax: 43.5012

## Geodetic CRS: NAD83# and change to a map projection of your choice. I am choosing UTM Iowa

SVI_county.sf <- st_transform(SVI_county.sf,3744)To check it worked, click on the name of the variable in the environment tab and plot the geometry

#Remember st_geometry removes the marks.

plot(st_geometry(SVI_county.sf))

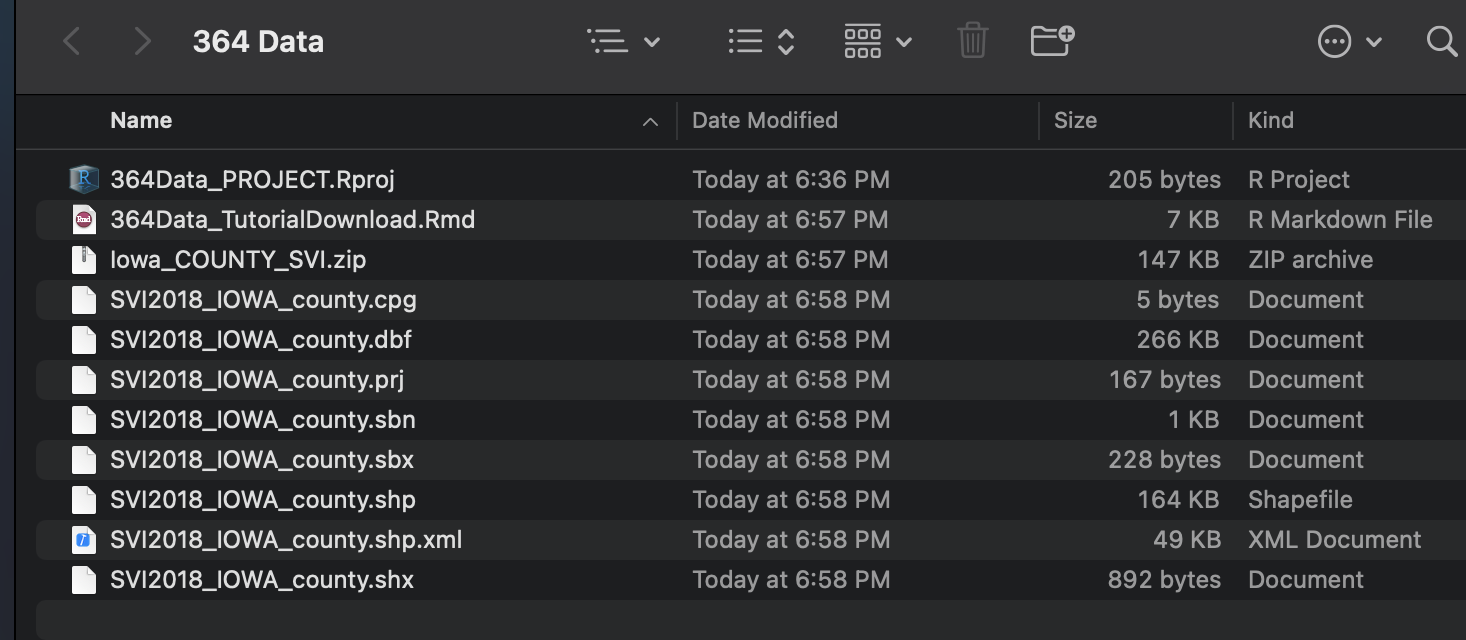

Troubleshooting

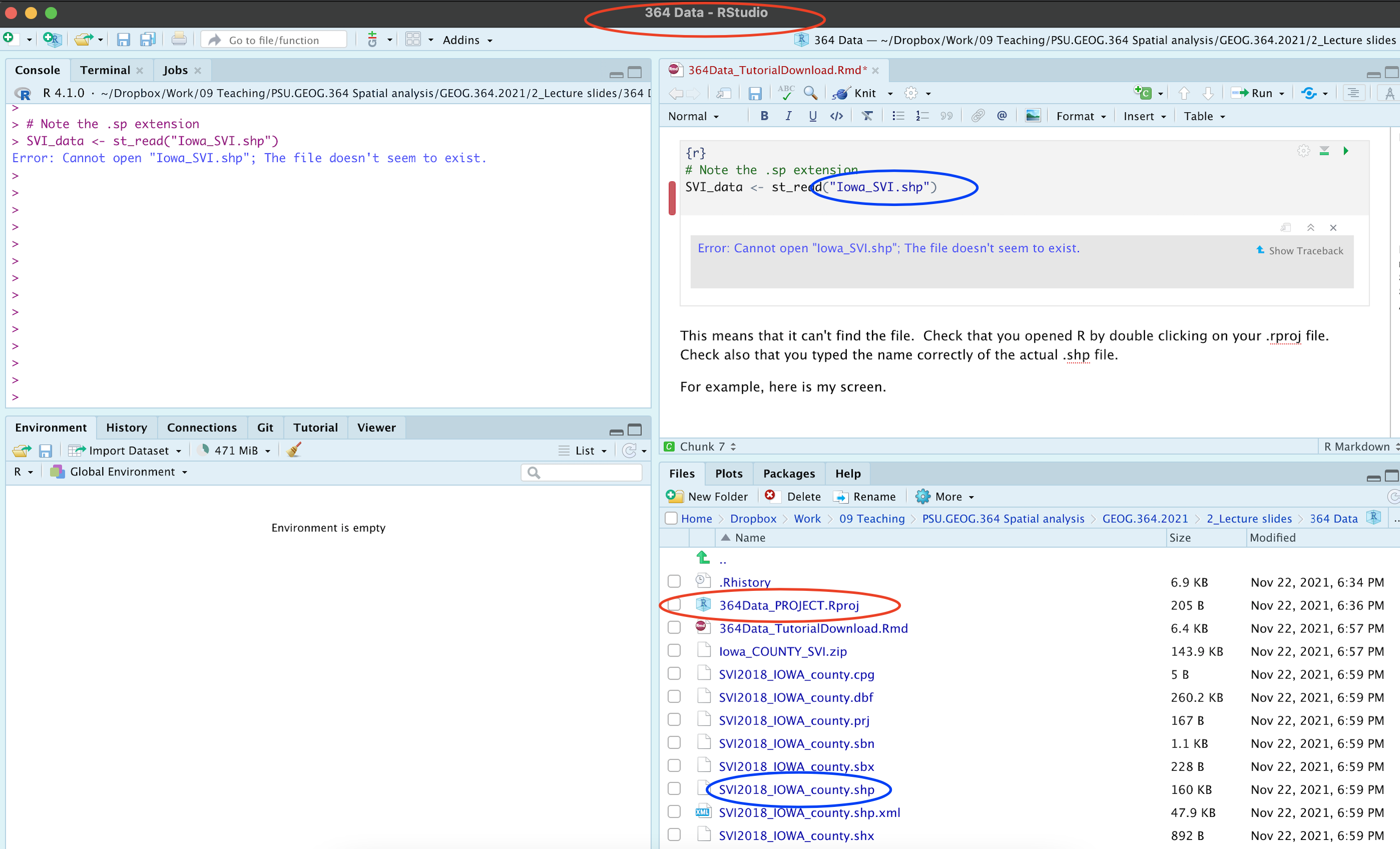

Sometimes you will get this error.

This means that it can’t find the file. There are three common reasons for this.

- You didn’t create a project, or open you didn’t open R-studio by double clicking your project file. If you have no idea what I mean, see Tutorial 2C.

- You unzipped your shape-file into a different place. You should be able to see them next to your code in your files tab with zero sub-folders.

- You mis-typed the file-name. It is case sensitive and requires the .shp at the end.

Let’s see what I did wrong. Here is my screen. You can see that I am running my R-project correctly (red circles) and I have unzipped all my files correctly. But! I got the wrong file name.

Raster data (geotifs, netcdf)

Put the raster in your project folder. This is likely a geotif (.tif) or a NetCDF file (.nc).

Read in the data using the raster command

WhatYouWantToCallYourVariable <- raster('FILENAME.tif')If your data is numeric, to extract for your points/polygons follow the tutorial in elevation

If your data is categorical, to extract for your points/polygons, follow the tutorial for landcover.

Borders

Country, State borders

There are many ways of doing this, see Tutorial 11 for more detail.

I am going to use the rnaturalearth package. Make sure library(rnaturalearth) is in your library code chunk at the top.

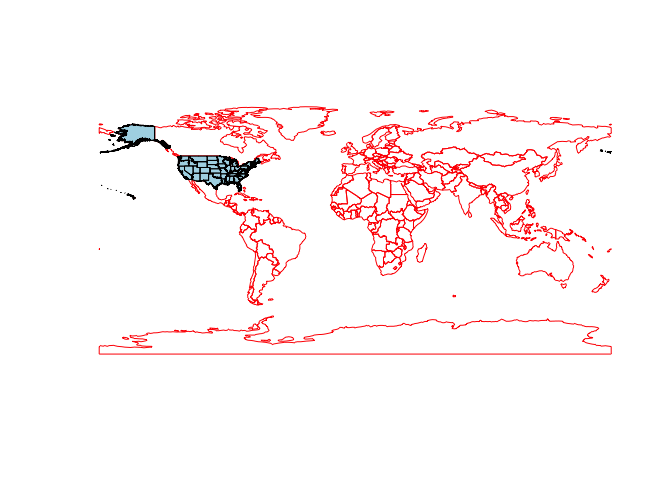

# world borders, read in

border.country.world.sf <- ne_countries(returnclass = "sf")

# US States

border.state.USA.sf <- ne_states(country="united states of america",returnclass = "sf")

# Make a plot to check

plot(st_geometry(border.country.world.sf),border="red")

plot(st_geometry(border.state.USA.sf),col="light blue",add=TRUE)

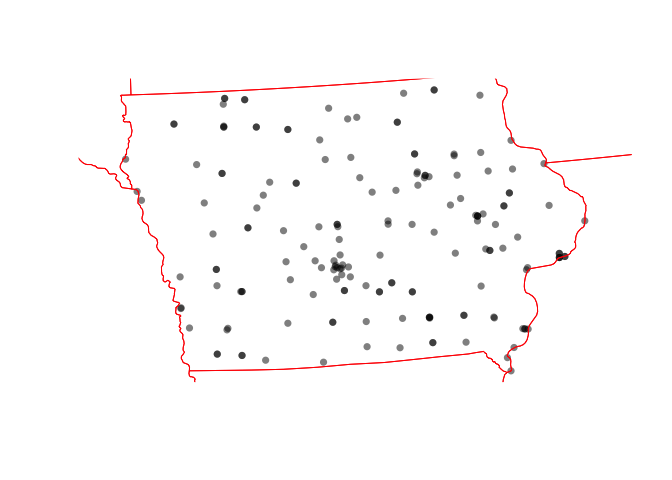

# change projection so the maps overlay just over the Iowa area

border.state.USA.sf <- st_transform(border.state.USA.sf,3744)

plot(st_geometry(IA_farmersmarket.sf),col=rgb(0,0,0,.5),pch=16)

plot(st_geometry(border.state.USA.sf),border="red",add=TRUE) ### rworldmap

### rworldmap

There is also rworldmap that I haven’t explored yet.

#library(rworldmap)

#lines(getMap(resolution = "high"), lwd=2) Counties and census tracts borders

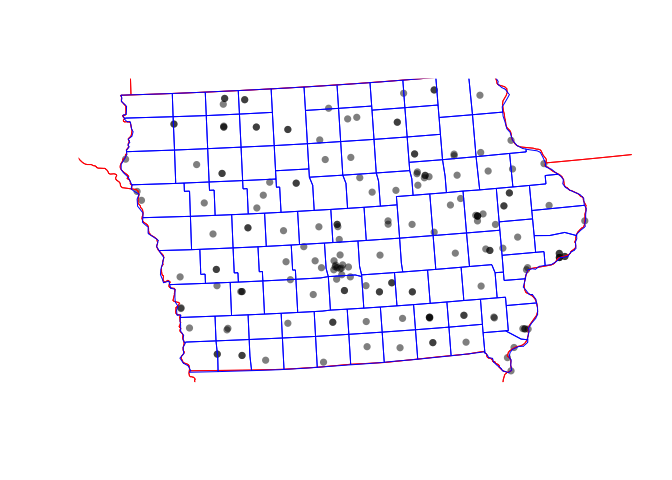

This is often easist to do by using get_acs (see below), but if you really want borders, then the USAboundaries() package is your best bet.

border.county.IA.sf <- us_counties(states="Iowa")

border.county.IA.sf <- st_transform(border.county.IA.sf,3744)

plot(st_geometry(IA_farmersmarket.sf),col=rgb(0,0,0,.5),pch=16)

plot(st_geometry(border.state.USA.sf),add=TRUE,border="red")

plot(st_geometry(border.county.IA.sf),add=TRUE,border="blue")

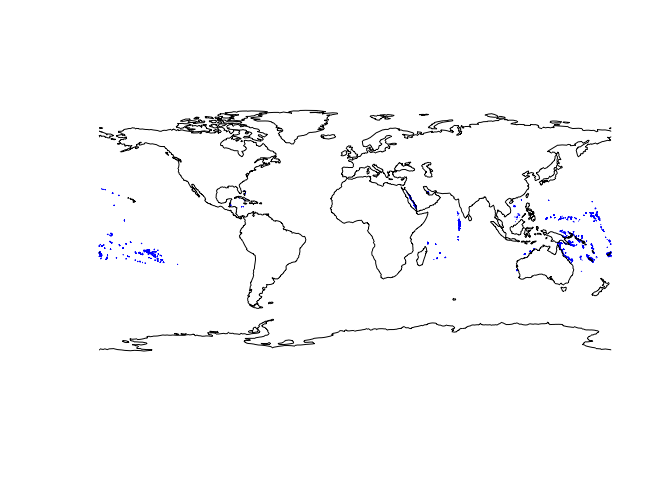

Coastlines and reef data

For those doing coastline projects, you can also download coastline and reef data using rnaturalearth

coast.world.sf <- ne_coastline(returnclass = "sf")

reefs.world.sf <- ne_download( scale = 'large', category="physical", type = 'reefs' ,returnclass = "sf")

plot(st_geometry(reefs.world.sf),col="blue")

plot(st_geometry(coast.world.sf),add=TRUE)

US Census data

The get_acs command

Make sure library(tidycensus) , library(units) ,library(sp) , library(sf) and library(tmap) are loaded in your library code chunk.

This is useful for population density!

There are full tutorials in Tutorial 6Ce and in Lab 7.

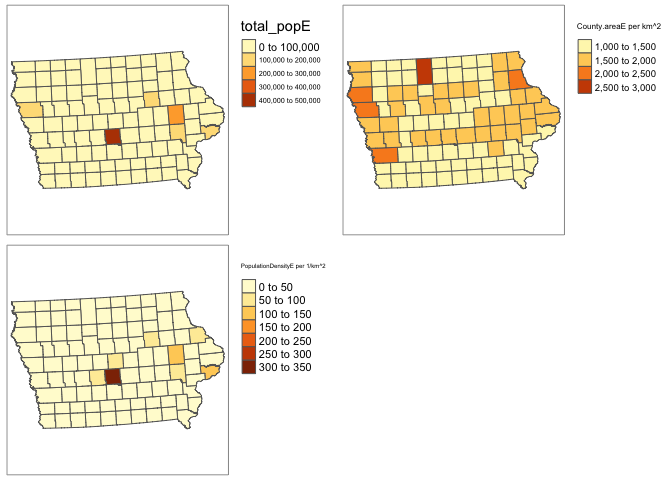

Here is all of the code together:

# Download some data for Iowa using get_acs

ACS_county.sf <- get_acs(geography = "county",

year = 2019,

variables = c(housevalue = "B25075_001", # house value

total_pop = "B05012_001", # total population

total_house = "B25001_001", # total housing units

med.income = "B19013_001"), # median income

state = c("IA"),

survey = "acs5",geometry=TRUE,

output = "wide")

# Change the map projection to UTM Iowa

ACS_county.sf <- st_transform(ACS_county.sf,3744)

# Get the areas

ACS_county.sf$County.areaE <- st_area(ACS_county.sf)

# Change the units of the areas from metres squared to km squared and overwrite

ACS_county.sf$County.areaE <- set_units(ACS_county.sf$County.areaE,"km^2")

# and divide the total population by the area

ACS_county.sf$PopulationDensityE <-ACS_county.sf$total_popE / ACS_county.sf$County.areaE

# check it worked. Iowa is a boring state!

map_totalpop <- qtm(ACS_county.sf,"total_popE")+tm_layout(legend.outside = TRUE)

map_area <- qtm(ACS_county.sf,"County.areaE")+tm_layout(legend.outside = TRUE)

map_popdens <- qtm(ACS_county.sf,"PopulationDensityE")+tm_layout(legend.outside = TRUE)

tmap_mode("plot")

suppressWarnings(tmap_arrange(map_totalpop,map_area,map_popdens))

# clean up your workspace, optional but good practice unless you later need this map

rm(map_totalpop); rm(map_area); rm(map_popdens)Dealing with non-Normal data (e.g. population density)

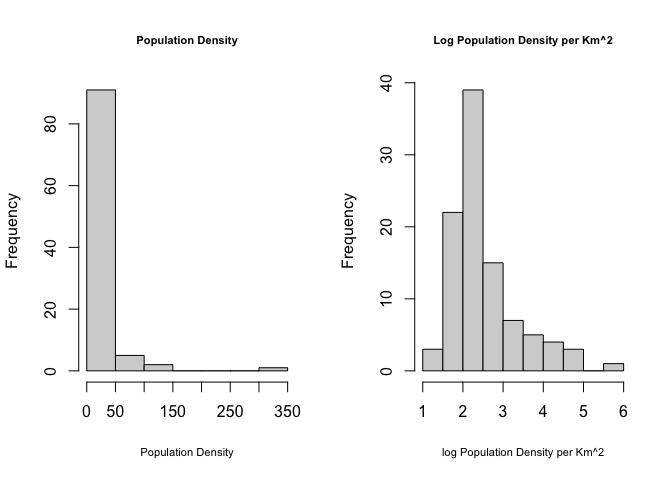

Population density, wealth and housing density are often very skewed, making the statistics hard. To get around this and to see more detail outside cities, we can take the log and save as a new column. For example

ACS_county.sf$Log_PopulationDensityE <- log(ACS_county.sf$PopulationDensityE)Comparing histograms

par(mfrow=c(1,2))

hist(ACS_county.sf$PopulationDensityE,

main=list("Population Density",cex=.7),xlab=list("Population Density",cex=.7))

hist(ACS_county.sf$Log_PopulationDensityE,

main=list("Log Population Density per Km^2",cex=.7),xlab=list("log Population Density per Km^2",cex=.7))

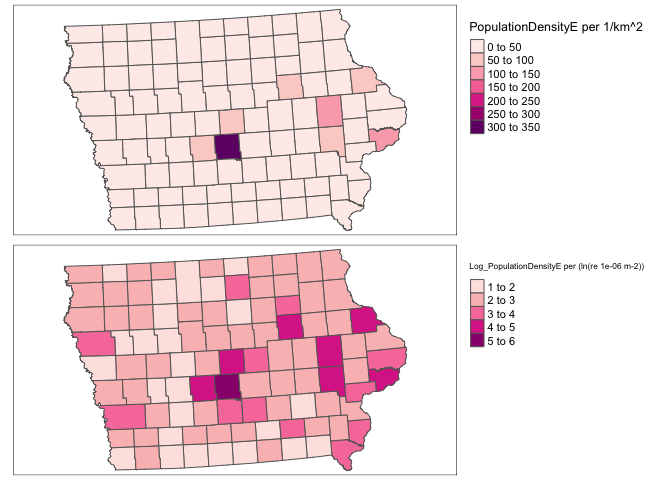

Comparing maps

map_popdens <- qtm(ACS_county.sf,"PopulationDensityE",fill.palette="RdPu")+

tm_layout(legend.outside = TRUE)

map_logpopdens <- qtm(ACS_county.sf,"Log_PopulationDensityE",fill.palette="RdPu")+

tm_layout(legend.outside = TRUE)

tmap_arrange(map_popdens,map_logpopdens)

Extracting Census data at point locations

See the project tutorial:

Elevation data

Downloading the data

Make sure that library(elevatr) and library(raster) are in your library code chunk at the top.

The elevatr package will allow you to download elevation data for a spatial area of your choice. It might break on the cloud. If so, let Dr G know - she can download you a subset.

Here we can extract elevation data just for the area we care about. E.g. here, I will extract for my farmers market data and my county SVI data.

REMEMBER TO PUT results=FALSE IN YOUR CODE CHUNK OPTIONS HERE.

# YOU WILL ONLY NEED ONE OF THESE

# Here I downloaded elevation for the border of my SVI data

elevation.raster_SVI <- elevatr::get_elev_raster(SVI_county.sf, z = 5,clip="locations")

# here I downloaded elevation for the area around my farmers markets (R doesn't understand this is Iowa!)

elevation.raster_farm <- elevatr::get_elev_raster(IA_farmersmarket.sf, z = 5,clip="locations")

# here I downloaded it for the border of Iowa itself using my counties

elevation.raster_IA <- elevatr::get_elev_raster(border.county.IA.sf, z = 5,clip="locations")

# here I created a long/lat box

Lonbox <- c(-93,-93 ,-95,-95)

Latbox <- c( 41, 41.5, 41, 41.5)

box_LongLat <- data.frame(Lon=Lonbox,Lat=Latbox)

box_LongLat.sf <- st_as_sf(box_LongLat,coords=c("Lon","Lat"),crs=4326)

box_LongLat.sf <- st_transform(box_LongLat.sf,3744)

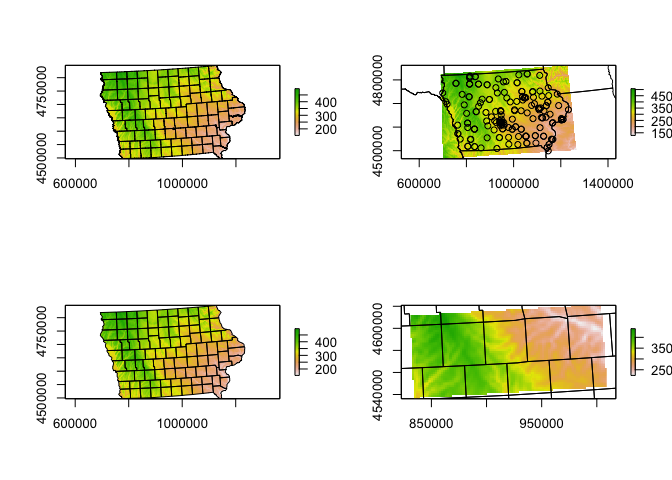

elevation.raster_box <- elevatr::get_elev_raster(box_LongLat.sf, z = 5,clip="bbox")Note when I plot, the results look like they are on a globe because of the UTM map projection.

# set up a 2x3 grid of plots

par(mfrow=c(2,2))

# plot 1

plot(elevation.raster_SVI)

plot(st_geometry(SVI_county.sf),add=TRUE)

# plot 2

plot(elevation.raster_farm)

plot(st_geometry(IA_farmersmarket.sf),add=TRUE)

plot(st_geometry(border.state.USA.sf),add=TRUE)

# plot 3

plot(elevation.raster_IA)

plot(st_geometry(border.county.IA.sf),add=TRUE)

# plot 4

plot(elevation.raster_box)

plot(st_geometry(border.county.IA.sf),add=TRUE)

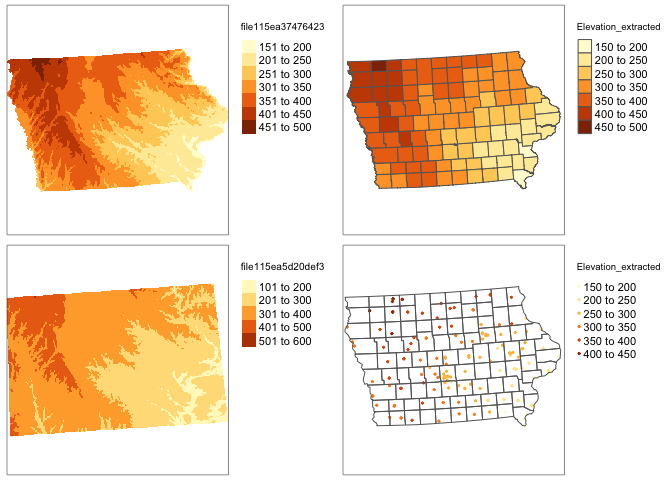

Adding elevation data to your datasets

Make sure that library(exactextractr) and library(raster) are in your library code chunk at the top.

We can simply use this package to extract the value at our locations - I’m choosing the average over the polygon. To extract

# add to polygon data

SVI_county.sf$Elevation_extracted <- exact_extract(elevation.raster_SVI,SVI_county.sf,"mean")For point data, we choose simply the extract function.

# add to point data

IA_farmersmarket.sf$Elevation_extracted <- raster::extract(elevation.raster_farm,IA_farmersmarket.sf)Click on the name of your dataset, you will see there is a new column called elevation. Here is what we have done

# plot 1

map1 <- qtm(elevation.raster_SVI)+tm_layout(legend.outside = TRUE)

map2 <- qtm(SVI_county.sf,"Elevation_extracted")+tm_layout(legend.outside = TRUE)

map3 <- qtm(elevation.raster_farm)+tm_layout(legend.outside = TRUE)

map4 <- qtm(IA_farmersmarket.sf,dots.col="Elevation_extracted")+

tm_shape(border.county.IA.sf)+tm_polygons(alpha=0)+

tm_layout(legend.outside = TRUE)

tmap_arrange(map1,map2,map3,map4)

rm(map1);rm(map2);rm(map3);rm(map4)Land Cover, forest canopy or concrete

(contributed by Susan)

We can download land cover data from the National Land Cover Database (NLCD) (https://www.mrlc.gov/) using the FedData package. This package allows you to download other federal data as well including elevation, soil data from the SSURGO soil database, Daymet weather data, USDA cropland layers, hydrography, etc. See this link (https://github.com/ropensci/FedData) for more information about what data you can obtain using the FedData package. This link has example code for downloading all these data.

First we need to install the ‘FedData’ package. We do this the same way as for the high res data packages. Run this code IN YOUR CONSOLE.

remotes::install_github("ropensci/FedData")Now make sure that these packages are in the code chunk at the top of your script.

library(FedData)

library(sf)

library(sp)

library(USAboundaries)

library(raster)Just like elevation or any other raster, we can download just for the area of our study. See below for our Iowa example. You would simply replace with your own sf data and nickname.

If you want forest canopy or concrete areas see the get_nlcd helpfile.

First, let’s look at what the data means

landcover_legend <- nlcd_colors()

landcover_legend## # A tibble: 20 × 4

## ID Class Color Description

## <dbl> <chr> <chr> <chr>

## 1 11 Open Water #5475A8 Areas of open water, generally wi…

## 2 12 Perennial Ice/Snow #FFFFFF Areas characterized by a perennia…

## 3 21 Developed, Open Space #E8D1D1 Areas with a mixture of some cons…

## 4 22 Developed, Low Intensity #E29E8C Areas with a mixture of construct…

## 5 23 Developed, Medium Intensity #ff0000 Areas with a mixture of construct…

## 6 24 Developed High Intensity #B50000 Highly developed areas where peop…

## 7 31 Barren Land (Rock/Sand/Clay) #D2CDC0 Areas of bedrock, desert pavement…

## 8 41 Deciduous Forest #85C77E Areas dominated by trees generall…

## 9 42 Evergreen Forest #38814E Areas dominated by trees generall…

## 10 43 Mixed Forest #D4E7B0 Areas dominated by trees generall…

## 11 51 Dwarf Scrub #AF963C Alaska only areas dominated by sh…

## 12 52 Shrub/Scrub #DCCA8F Areas dominated by shrubs; less t…

## 13 71 Grassland/Herbaceous #FDE9AA Areas dominated by gramanoid or h…

## 14 72 Sedge/Herbaceous #D1D182 Alaska only areas dominated by se…

## 15 73 Lichens #A3CC51 Alaska only areas dominated by fr…

## 16 74 Moss #82BA9E Alaska only areas dominated by mo…

## 17 81 Pasture/Hay #FBF65D Areas of grasses, legumes, or gra…

## 18 82 Cultivated Crops #CA9146 Areas used for the production of …

## 19 90 Woody Wetlands #C8E6F8 Areas where forest or shrubland v…

## 20 95 Emergent Herbaceous Wetlands #64B3D5 Areas where perennial herbaceous …# THIS TAKE A LONG TIME TO RUN!

# IT WILL CRASH THE CLOUD. DR G or SUSAN CAN DOWNLOAD IT FOR YOU

# Here I downloaded land cover for the border of my SVI data. You can choose the nickname

NLCD_SVI <- get_nlcd(template = SVI_county.sf,year = 2011,label = "Iowa SVI landcover")

# here I downloaded land cover for the area around my farmers markets (R doesn't understand this is Iowa!)

NLCD_farm <- get_nlcd(template = IA_farmersmarket.sf,year = 2011,label = "Iowa farmers landcover")

# here I downloaded land cover for the border of Iowa itself using my counties

NLCD_IA <- get_nlcd(template = border.county.IA.sf,year = 2011,label = "Iowa landcover")Because it takes long to download large areas, it is better to save the landcover file to your project folder and load it from there instead of downloading it every time you knit your project.

#Save the landcover dataset to your project folder.

writeRaster(NLCD_IA,

filename = "IA_Landcover.tif",

format="GTiff", overwrite=TRUE)After you have successfully downloaded and saved the data to you project folder, you can load it into R using the following code.

If you can load the data successfully, you now do not need any of the above code which is basically for downloading and saving the file. I suggest commenting it out and hiding it in an “include=FALSE, eval-FALSE” code chunk so that you do not lose the code in the event you have to rerun.

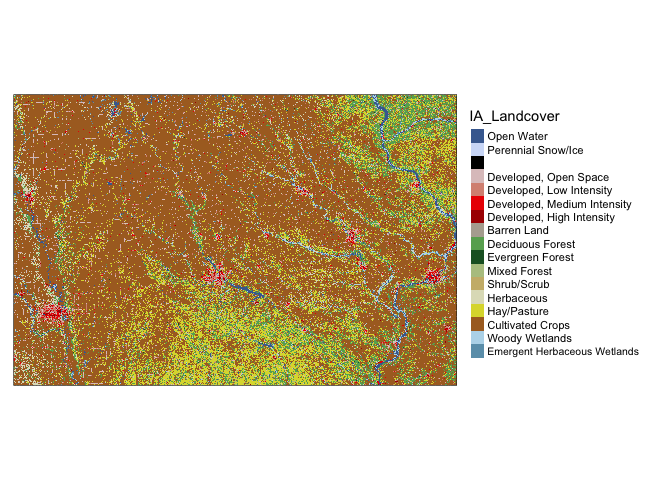

IA_Landcover <- raster('IA_Landcover.tif')

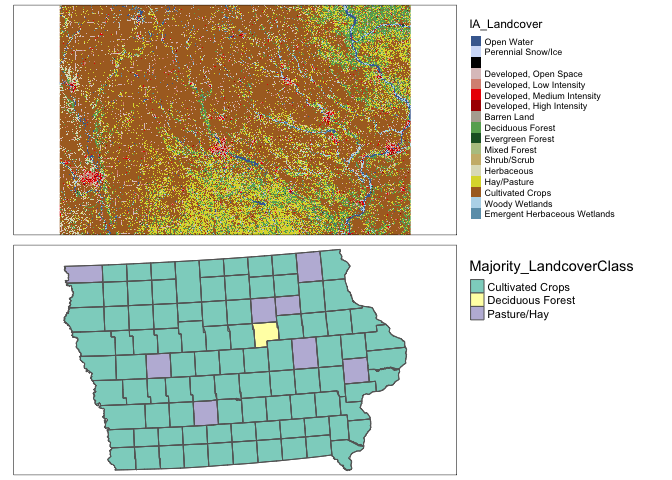

tmap_mode("plot")## tmap mode set to plottingqtm(IA_Landcover)+tm_layout(legend.outside = TRUE)## stars_proxy object shown at 1235 by 811 cells.## Warning: Duplicated levels found. They have been omitted## Some legend labels were too wide. These labels have been resized to 0.65. Increase legend.width (argument of tm_layout) to make the legend wider and therefore the labels larger.

Adding land cover data to your points dataset

Make sure that library(raster) is in your library code chunk at the top.

For point data, we choose simply the extract function. Here I also added in the key - because the number isn’t very informative.

#--------------------------------------------------------------

# Turn everything to the same map projection

#--------------------------------------------------------------

IA_farmersmarket.sf <- st_transform(IA_farmersmarket.sf,crs(IA_Landcover))

#--------------------------------------------------------------

# add to point data

#--------------------------------------------------------------

IA_farmersmarket.sf$LandcoverNumber <- raster::extract(IA_Landcover,

IA_farmersmarket.sf)

IA_farmersmarket.sf$LandcoverNumber <- as.factor(IA_farmersmarket.sf$LandcoverNumber)

#--------------------------------------------------------------

# for the landcover data specifically, this might be better

#--------------------------------------------------------------

key <- nlcd_colors()[,c("ID","Class")]

names(key) <- c("LandcoverNumber","LandcoverClass")

IA_farmersmarket.sf <- merge(IA_farmersmarket.sf ,key,by="LandcoverNumber",all.x=TRUE,all.y=FALSE)

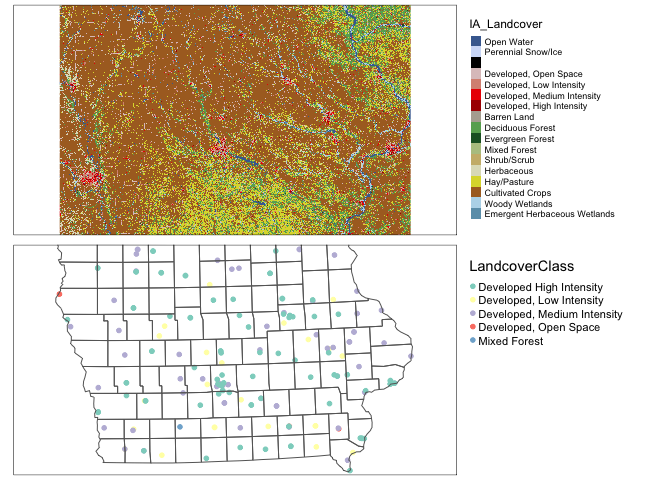

IA_farmersmarket.sf$LandcoverClass <- as.factor(IA_farmersmarket.sf$LandcoverClass )map1 <- qtm(IA_Landcover)+tm_layout(legend.outside = TRUE)

map2 <- qtm(IA_farmersmarket.sf,dots.col="LandcoverClass",dots.size=.1)+

tm_shape(border.county.IA.sf)+tm_polygons(alpha=0)+

tm_layout(legend.outside = TRUE)

tmap_arrange(map1,map2)## stars_proxy object shown at 1235 by 811 cells.## Warning: Duplicated levels found. They have been omitted## stars_proxy object shown at 1235 by 811 cells.## Warning: Duplicated levels found. They have been omitted

rm(map1);rm(map2)Adding land cover data to your polygons dataset

For polygons it’s even more complex because the “mean” landcover doesn’t make sense! I guess in that we want to choose the land-cover with the greatest area in your county.

# This is taking the most common value in each polygon

# add to polygon data

extractedvalues <- data.frame(ID=exact_extract(NLCD_SVI,SVI_county.sf,fun="majority"))

# add in codes

Newvalues <- merge(extractedvalues,nlcd_colors(),by="ID",all.x=TRUE,all.y=FALSE)

# and extract the codes

SVI_county.sf$Majority_LandcoverClass <- as.factor(Newvalues$Class)map1 <- qtm(IA_Landcover)+tm_layout(legend.outside = TRUE)

map2 <- qtm(SVI_county.sf,"Majority_LandcoverClass")+

tm_shape(border.county.IA.sf)+tm_polygons(alpha=0)+

tm_layout(legend.outside = TRUE)

tmap_arrange(map1,map2,nrow=2)## stars_proxy object shown at 1235 by 811 cells.## Warning: Duplicated levels found. They have been omitted

rm(map1);rm(map2)Weather data

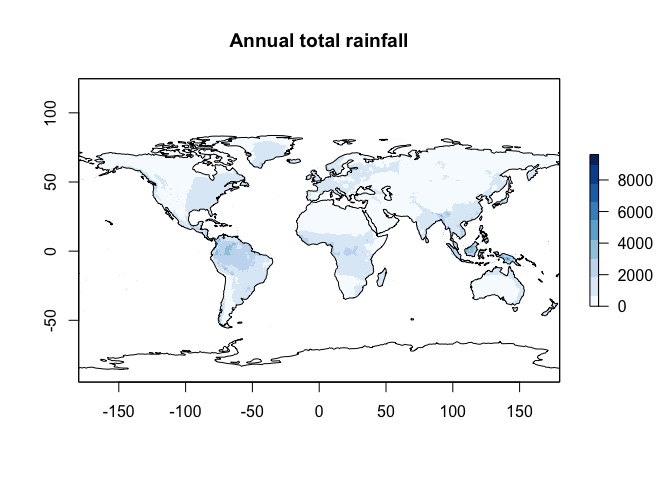

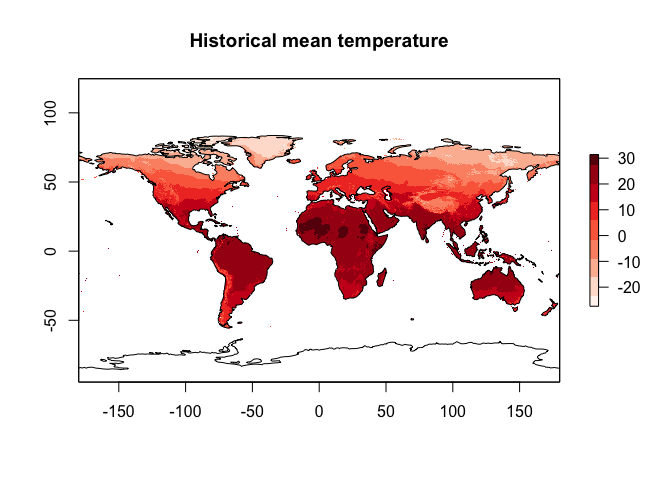

WorldClim Temperature, Rainfall

We can get weather data from worldclim. There is an excellent tutorial here: https://bedatablog.netlify.app/post/download-and-illustrate-current-and-projected-climate-in-r/

Make sure thatlibrary(raster) is in your library code chunk at the top.

See here for details: https://www.worldclim.org/data/worldclim21.html or look at ?raster::getData. Valid variables names are

- ‘tmin’: Monthly minimum temperature

- ‘tmax’: Monthly maximum temperature

- ‘prec’: Monthly rainfall

- and ‘bio’ (https://www.worldclim.org/data/bioclim.html), which downloads a list of:

- BIO1 = Annual Mean Temperature

- BIO2 = Mean Diurnal Range (Mean of monthly (max temp - min temp))

- BIO3 = Isothermality (BIO2/BIO7) (×100)

- BIO4 = Temperature Seasonality (standard deviation ×100)

- BIO5 = Max Temperature of Warmest Month

- BIO6 = Min Temperature of Coldest Month

- BIO7 = Temperature Annual Range (BIO5-BIO6)

- BIO8 = Mean Temperature of Wettest Quarter

- BIO9 = Mean Temperature of Driest Quarter

- BIO10 = Mean Temperature of Warmest Quarter

- BIO11 = Mean Temperature of Coldest Quarter

- BIO12 = Annual Precipitation

- BIO13 = Precipitation of Wettest Month

- BIO14 = Precipitation of Driest Month

- BIO15 = Precipitation Seasonality (Coefficient of Variation)

- BIO16 = Precipitation of Wettest Quarter

- BIO17 = Precipitation of Driest Quarter

- BIO18 = Precipitation of Warmest Quarter

- BIO19 = Precipitation of Coldest Quarter

Downloading WorldClim data

OPTION1: Download the data separately from https://www.worldclim.org/data/worldclim21.html, save it to your Project folder, then read it in using the raster() command.

OPTION2: We can automatically download the data to your computer using raster::getData . You can also download monthly historical weather data or future climate model output - see the getData helpfile for those. I am choosing to just look at the bioclim averages.

Remember to add results = FALSE in your code chunk options

# THIS IS 123 MEGABYTES! If that is too big for your computer, tell Dr Greatrex and she will subset for you.

worldclim.BIO <- raster::getData(name = 'worldclim', var = 'bio', res = 2.5)To choose an individual BIO variable (see the list above), we use double [[ ]]

#------------------------------------------------

# Average annual temp

#------------------------------------------------

worldclim.Annual.Mean.Temperature <- worldclim.BIO$bio1

# For some reason Temperature is stored in degrees C *10 , so

worldclim.Annual.Mean.Temperature <- worldclim.Annual.Mean.Temperature/10

#------------------------------------------------

# Rain see above for the codes

#----------------------------------------------

worldclim.Annual.Mean.Rain <- worldclim.BIO$bio12# change the projection of the coastline data to match

coast.world.sf <- st_transform(coast.world.sf,crs(worldclim.Annual.Mean.Rain))

# Tmap hatesr

plot(worldclim.Annual.Mean.Rain,

col = RColorBrewer::brewer.pal(9, "Blues"),

main="Annual total rainfall")

plot(st_geometry(coast.world.sf),add=TRUE)

plot(worldclim.Annual.Mean.Temperature,

col = RColorBrewer::brewer.pal(9, "Reds"),

main="Historical mean temperature")

plot(st_geometry(coast.world.sf),add=TRUE)

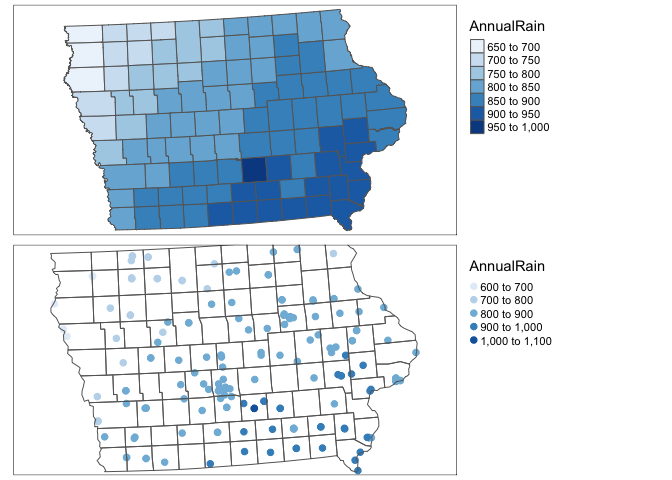

Adding WorldClim to your datasets

This is exactly the same as for your elevation data. Make sure that library(exactextractr) and library(raster) are in your library code chunk at the top.

We can simply use this package to extract the value at our locations - I’m choosing the average over the polygon. To extract

# change projections to match

SVI_county.sf <- st_transform(SVI_county.sf,4326)

# add to polygon data

SVI_county.sf$AnnualRain <- exact_extract(worldclim.Annual.Mean.Rain,

SVI_county.sf,"mean")

SVI_county.sf$AnnualMean_temp <- exact_extract(worldclim.Annual.Mean.Temperature,

SVI_county.sf,"mean")

# change projection back

SVI_county.sf <- st_transform(SVI_county.sf,3744)For point data, we choose simply the extract function.

# change projection

IA_farmersmarket.sf <- st_transform(IA_farmersmarket.sf,4326)

# add to point data

IA_farmersmarket.sf$AnnualRain <- raster::extract(worldclim.Annual.Mean.Rain,

IA_farmersmarket.sf)

IA_farmersmarket.sf$AnnualMean_temp <- raster::extract(worldclim.Annual.Mean.Temperature,IA_farmersmarket.sf)

# change projection back

IA_farmersmarket.sf <- st_transform(IA_farmersmarket.sf,3744)Click on the name of your dataset, you will see there is a new column called AnnualRain and AnnualMean_temp. Here is what we have done

# plot 1

map1 <- qtm(SVI_county.sf,"AnnualRain",fill.palette="Blues")+tm_layout(legend.outside = TRUE)

map2 <- qtm(IA_farmersmarket.sf,dots.col="AnnualRain",dots.size=.2,dots.palette="Blues")+

tm_shape(border.county.IA.sf)+

tm_polygons(alpha=0)+

tm_layout(legend.outside = TRUE)

tmap_arrange(map1,map2)

rm(map1);rm(map2)# plot 1

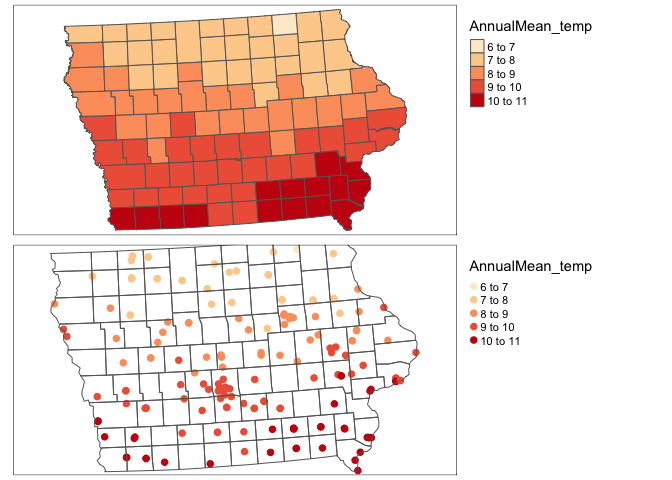

map1 <- qtm(SVI_county.sf,"AnnualMean_temp",fill.palette="OrRd")+tm_layout(legend.outside = TRUE)

map2 <- qtm(IA_farmersmarket.sf,dots.col="AnnualMean_temp",dots.size=.2,dots.palette="OrRd")+

tm_shape(border.county.IA.sf)+

tm_polygons(alpha=0)+

tm_layout(legend.outside = TRUE)

tmap_arrange(map1,map2)

rm(map1);rm(map2)Maximum Windspeed

You will need to install the rWind package (note the capital W) and the lubridate. Here is a wonderful tutorial

https://www.r-bloggers.com/2018/11/plotting-wind-highways-using-rwind/

Remember to put results=FALSE in the code chunk options.

library(rWind)

library(lubridate) ##

## Attaching package: 'lubridate'## The following objects are masked from 'package:raster':

##

## intersect, union## The following objects are masked from 'package:base':

##

## date, intersect, setdiff, union library(dplyr) ##

## Attaching package: 'dplyr'## The following objects are masked from 'package:raster':

##

## intersect, select, union## The following objects are masked from 'package:stats':

##

## filter, lag## The following objects are masked from 'package:base':

##

## intersect, setdiff, setequal, union# Here, we use ymd_hms from lubridate package to create a sequence of dates

# Given this is just a class project,

# JUST CHOOSE 1 MONTH

startdate <- ymd_hms(paste(2018,6,1,12,00,00, sep="-"))

enddate <- ymd_hms(paste(2018,6,30,12,00,00, sep="-"))

TimeSeries <- seq(startdate, enddate,by="1 days")

# This is my box over the Eastern US Coast

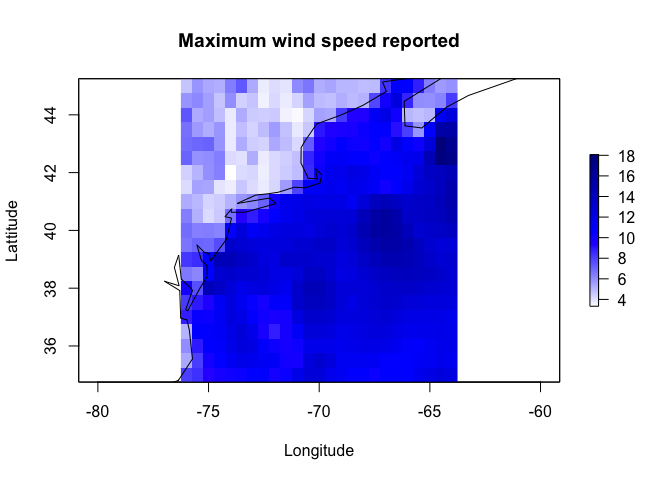

Windspeed <- wind.dl_2(TimeSeries,-76,-64,35,45)

# Tidy the data

tidy_Windspeed <- tidy(Windspeed)

max_Windspeed <- tidy_Windspeed %>%

dplyr::group_by(lat, lon) %>%

dplyr::summarise(speed = max(speed)) ## `summarise()` has grouped output by 'lat'. You can override using the `.groups` argument.max_Windspeed <- cbind(max_Windspeed$lon,

max_Windspeed$lat,

max_Windspeed$speed)

max_Windspeed.raster <- rasterFromXYZ(max_Windspeed)

acol <- colorRampPalette(c("white", "blue", "darkblue"))

plot(max_Windspeed.raster, col=acol(1000),

main= "Maximum wind speed reported",

xlab="Longitude", ylab="Lattitude")

plot(st_geometry(coast.world.sf),add=TRUE)

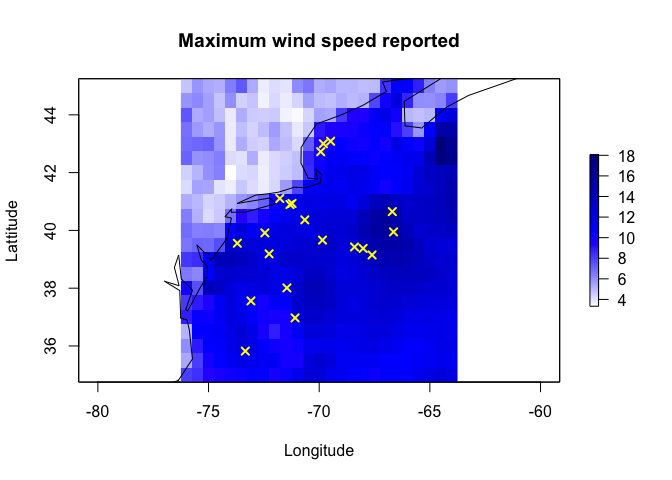

and let’s extract for our points. I’m just going to make a random set of points

RandomData <- data.frame(x=rnorm(20,mean=-70,sd=2),

y=rnorm(20,mean=40,sd=2))

RandomData.sf <- st_as_sf(RandomData,coords=c("x","y"),crs=4326)

plot(max_Windspeed.raster, col=acol(1000),

main= "Maximum wind speed reported",

xlab="Longitude", ylab="Lattitude")

plot(st_geometry(coast.world.sf),add=TRUE)

plot(st_geometry(RandomData.sf),col="yellow",add=TRUE,pch=4,lwd=2)

RandomData.sf$Wind <- raster::extract(max_Windspeed.raster,RandomData.sf)

tmap_mode("view")## tmap mode set to interactive viewingqtm(RandomData.sf,dots.col="Wind",dots.size=.2)Additional data sources

there is a great tutorial here I haven’t explored https://mikejohnson51.github.io/climateR-intro/#98

OCEANS

Coastlines

See borders section

Windspeed

See weather data section.

Ocean floor depth

A great tutorial here: https://cran.r-project.org/web/packages/marmap/vignettes/marmap-DataAnalysis.pdf

You will need to install the marmap library. Remember to add results = FALSE in the code chunk options

library(marmap)## Registered S3 methods overwritten by 'adehabitatMA':

## method from

## print.SpatialPixelsDataFrame sp

## print.SpatialPixels sp##

## Attaching package: 'marmap'## The following object is masked from 'package:raster':

##

## as.raster## The following object is masked from 'package:grDevices':

##

## as.raster# choose your lon/lat box - I chose mine to be just off the Eastern US coastline

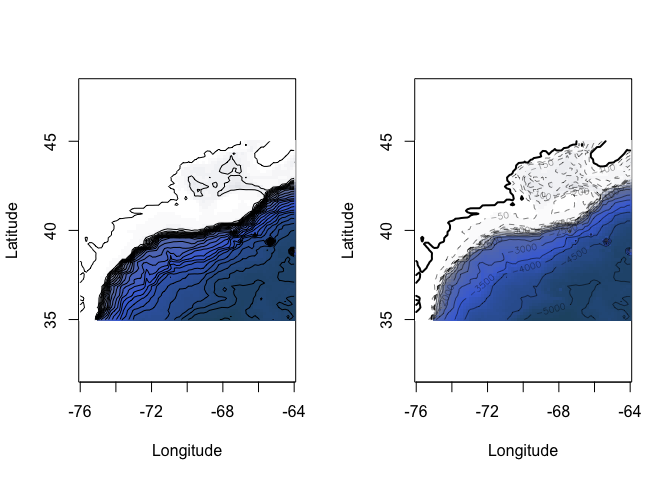

oceandepth <- getNOAA.bathy(lon1 = -76, lon2 = -64,

lat1 = 35, lat2 = 45, resolution = 10)## Querying NOAA database ...## This may take seconds to minutes, depending on grid size## Building bathy matrix ...# and plot

par(mfrow=c(1,2))

plot(oceandepth, image=TRUE)

plot(oceandepth, image=TRUE, deep=c(-6000,-300,0),

shallow=c(-300,-10,0),

step=c(500,50,0),

lwd=c(0.5,0.5,2),

lty=c(1,2,1),

drawlabels=c(TRUE,TRUE,FALSE))

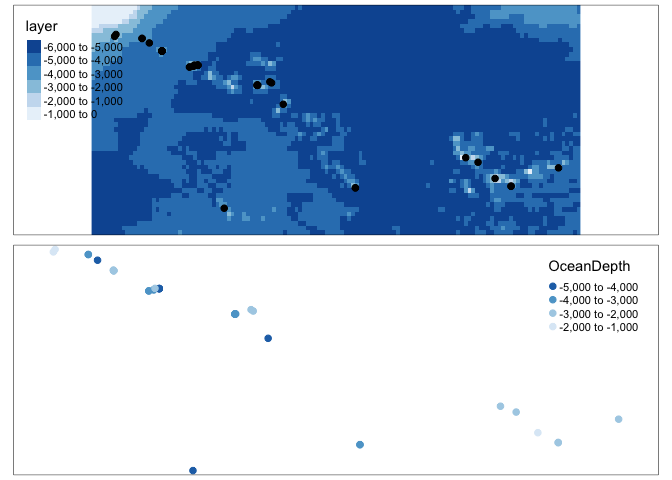

Now let’s extract the values at our point locations. I need some ocean points! I’m going to use the metallo dataset which shows coral samples over the atlantic.

library(marmap)

# get some data

data("metallo")

coraldata <- metallo

coraldata.sf <- st_as_sf(coraldata,coords=c("lon","lat"))

# make a box a little bigger than our area. There are more elegant ways of doing this.

lon1 <- sign(extent(coraldata.sf)@xmin)*abs(extent(coraldata.sf)@xmin)-1

lon2 <- sign(extent(coraldata.sf)@xmax)*abs(extent(coraldata.sf)@xmax)+1

lat1 <- sign(extent(coraldata.sf)@ymin)*abs(extent(coraldata.sf)@ymin)-1

lat2 <- sign(extent(coraldata.sf)@ymax)*abs(extent(coraldata.sf)@ymax)+1

oceandepth_coral <- getNOAA.bathy(lon1, lon2, lat1, lat2, resolution = 10)## Querying NOAA database ...## This may take seconds to minutes, depending on grid size## Building bathy matrix ...oceandepth_coral.raster <- as.raster(oceandepth_coral)

coraldata.sf$OceanDepth <- raster::extract(oceandepth_coral.raster,coraldata.sf)

# and plot

map1 <- tm_shape(oceandepth_coral.raster)+tm_raster(palette="Blues") +

tm_shape(coraldata.sf)+tm_dots(size=.2)

map2 <- qtm(coraldata.sf,dots.col="OceanDepth",dots.palette="Blues",dots.size=.2)

tmap_mode("plot")## tmap mode set to plottingtmap_arrange(map1,map2)## Warning: Currect projection of shape coraldata.sf unknown. Long-lat (WGS84) is

## assumed.

## Warning: Currect projection of shape coraldata.sf unknown. Long-lat (WGS84) is

## assumed.

## Warning: Currect projection of shape coraldata.sf unknown. Long-lat (WGS84) is

## assumed.

## Warning: Currect projection of shape coraldata.sf unknown. Long-lat (WGS84) is

## assumed.

rm(map1);rm(map2)Sea Surface Temperature Raw

Here’s the tutorial. Happy to help folks work through it in labs

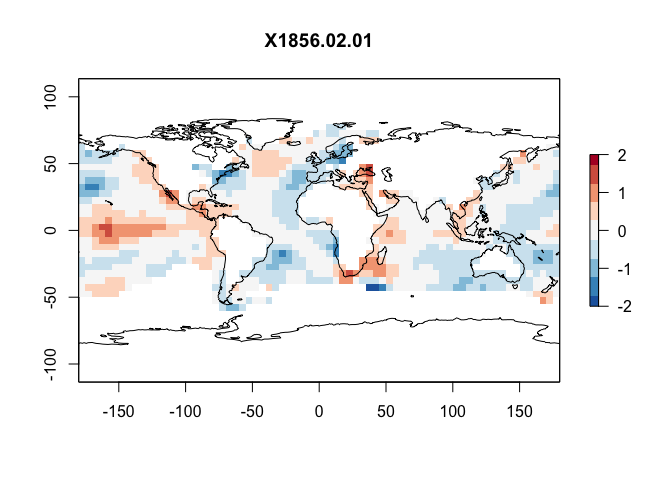

Sea Surface Temperature CHANGE

Go here and download this dataset (the .nc file) into your project folder https://psl.noaa.gov/data/gridded/data.kaplan_sst.html.

This is a netcdf file and we can read it in using the raster package and the stack command, It contains the anomaly (e.g. colder/hotter than average for each historical month)

# rotate shifts the long from 0-360 to -180:180

SST_anom <- rotate(stack("sst.mon.anom.nc"))

# change the coastline data (downloaded in borders) to have the same projection

coast.world.sf <- st_transform(coast.world.sf,crs(SST_anom))

#For example make a plot

layer <- 2

plot(SST_anom[[layer]],zlim=c(-2,2),

col = rev(RColorBrewer::brewer.pal(9, "RdBu")),

main=names(SST_anom[[layer]]))

plot(st_geometry(coast.world.sf),add=TRUE)

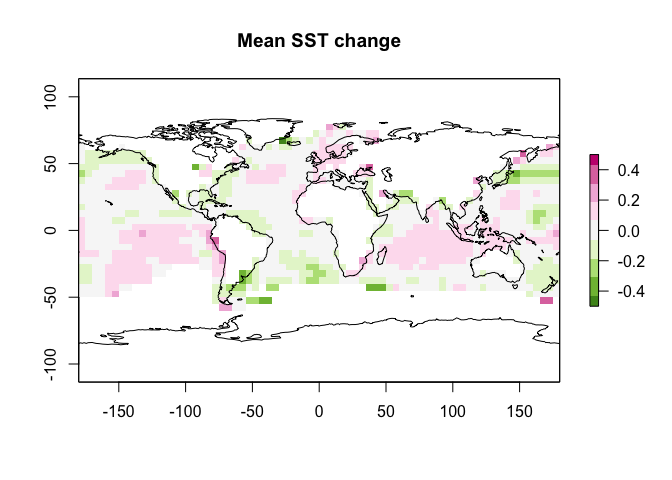

But we want the average change, so we take the mean

meanSST.Change <- mean(SST_anom)

plot(meanSST.Change,zlim=c(-.5,.5),

col = rev(RColorBrewer::brewer.pal(9, "PiYG")),

main="Mean SST change")

plot(st_geometry(coast.world.sf),add=TRUE)

and let’s extract for our coral points

coraldata.sf$SSTChange <- raster::extract(meanSST.Change,coraldata.sf)

tmap_mode("view")## tmap mode set to interactive viewingqtm(coraldata.sf,dots.col="SSTChange",dots.size=.2,dots.palette="PiYG")## Warning: Currect projection of shape coraldata.sf unknown. Long-lat (WGS84) is

## assumed.## Variable(s) "SSTChange" contains positive and negative values, so midpoint is set to 0. Set midpoint = NA to show the full spectrum of the color palette.