FINAL PROJECT EXAMPLE: Movie Ratings

Introduction

In the film industry, predictive modeling and machine learning are increasingly used to help studios make data-driven decisions and predict the success of films. From forecasting box office revenue to optimizing marketing campaigns, these models provide insights that can significantly influence a film’s profitability. Additionally, machine learning algorithms can analyze viewer preferences and behaviors, enabling studios to tailor content to specific audiences.

A key data source for the film industry is the Rotten Tomatoes website, an online aggregator that compiles critic and audience reviews into a “Tomatometer” score. This score, distinguishing films as “Fresh” or “Rotten,” heavily influences viewer perceptions and can drive box office success, as many potential viewers consult these ratings before choosing to see a film.

The aim is to assess how different factors, including the rotten tomatoes score, influences the domestic gross profit of a MAINSTREAM film (not a pilot run) for Lion Studios, using a training dataset based on 116 movies released in 2011. This dataset includes the following variables.

| Variable Name | Description |

|---|---|

| Name | Film Name |

| DomesticGross (million USD) | Gross revenue in the US by the end of 2011, in millions of dollars |

| RottenTomatoes (%) | Percentage rating from critics reviews on Rotten Tomatoes |

| AudienceScore (%) | Percentage audience rating from opening weekend surveys |

| TheatersOpenWeek | Number of cinemas showing the movie on opening weekend. Films that were shown in less than 1000 cinemas are considered ‘pilot/test’ showings that should not be included in the analysis |

| BOAverageOpenWeek (USD) | Average box office revenue per theater opening weekend, in dollars |

| Profitability (categorical) |

Loss: The film cost more to make than it gained as profit. Average: 100-300% of the budget spent was gained as profit. High: Greater than 300% of the budget spent was gained as profit. |

A sample of the data can be seen here.

head(as.data.frame(movies))## Name DomesticGross RottenTomatoes AudienceScore

## 1 The Dilemma 0.03 6 53

## 2 Hanna 0.97 62 57

## 3 Friends With Benefits 4.40 66 55

## 4 Scream 4 4.46 46 66

## 5 Dolphin Tale 5.31 83 84

## 6 One Day 6.93 28 46

## TheatersOpenWeek BOAverageOpenWeek Profitability

## 1 3 2972 Loss

## 2 22 4890 Loss

## 3 106 6111 Loss

## 4 265 3856 Loss

## 5 247 7174 Loss

## 6 2003 1730 LossExploratory data analysis

The training data for this project includes information on 116 movies released in 2011:

Object of analysis: An individual film

Population: All films released by Lion Studios from 2011-present

Response variable: DomesticGross (millions of USD)

It is difficult in this case to assess whether the dataset is truly representative of the film industry now. With the rise of digital technology, streaming and COVID, the situation at present may look very different. Equally, there have been concerns about whether rotten tomatoes is gender neutral (as many more men than women appear to vote, further skewing the results towards films men are likely to watch). That said, this is the data available for this study, so we shall continue as requested.

Data wrangling

## Name DomesticGross RottenTomatoes AudienceScore

## Length:117 Min. : 0.03 Min. : 4.00 Min. :25.00

## Class :character 1st Qu.: 24.82 1st Qu.:30.00 1st Qu.:49.00

## Mode :character Median : 43.85 Median :49.00 Median :59.00

## Mean : 71.57 Mean :52.45 Mean :61.44

## 3rd Qu.: 98.80 3rd Qu.:76.00 3rd Qu.:76.00

## Max. :381.01 Max. :97.00 Max. :93.00

## TheatersOpenWeek BOAverageOpenWeek Profitability

## Min. : 3 Min. : 1513 Average:58

## 1st Qu.:2555 1st Qu.: 3782 High :40

## Median :2996 Median : 5715 Loss :19

## Mean :2839 Mean : 8407

## 3rd Qu.:3417 3rd Qu.: 8995

## Max. :4375 Max. :93230The studio (Dr G), asked for me to only assess mainstream movies rather than pilot or test showings. They defined this as films shown in over 1000 theatres in their opening week and it is clear from the summary that there are pilot films in the dataset. This means I am filtering down to the correct,mainstream-film sub-set.

movies <- filter(movies,TheatersOpenWeek > 1000)Response Variable Analysis

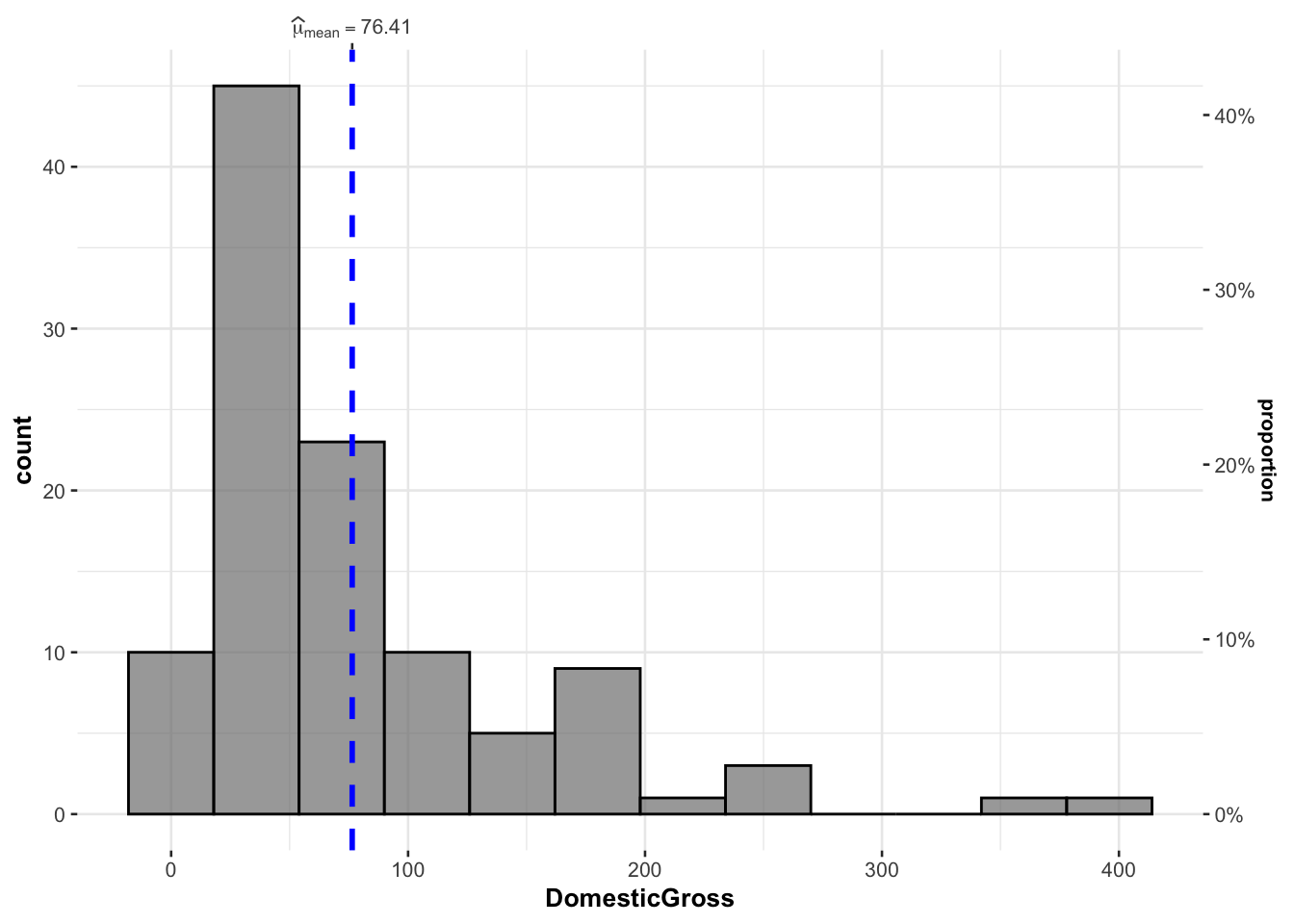

gghistostats(DomesticGross,data=movies,results.subtitle=FALSE)

As shown in the summary above, the domestic gross of films in the sample ranges from $400,000 (Friends with Benefits) to $381 million USD (Harry Potter and the Deathly Hallows II) with a mean domestic gross profit of $76.41 million and a median value of $51.93 million USD. As shown in the histogram, the data is highly skewed, with the films Harry Potter and 30 Minutes or Less making substantially more money than other films released in 2011.

Correlation Matrix

Correlation matrices are a quick and easy way of assessing whether there is a linear relationship between many sets of variables. However it is important to note that it will only “see” linear relationships, a complex pattern such as a curve will not be reflected in the statistics.

# remove the name column and the factor column

movies.numeric <- movies[ , sapply(movies,is.numeric)]

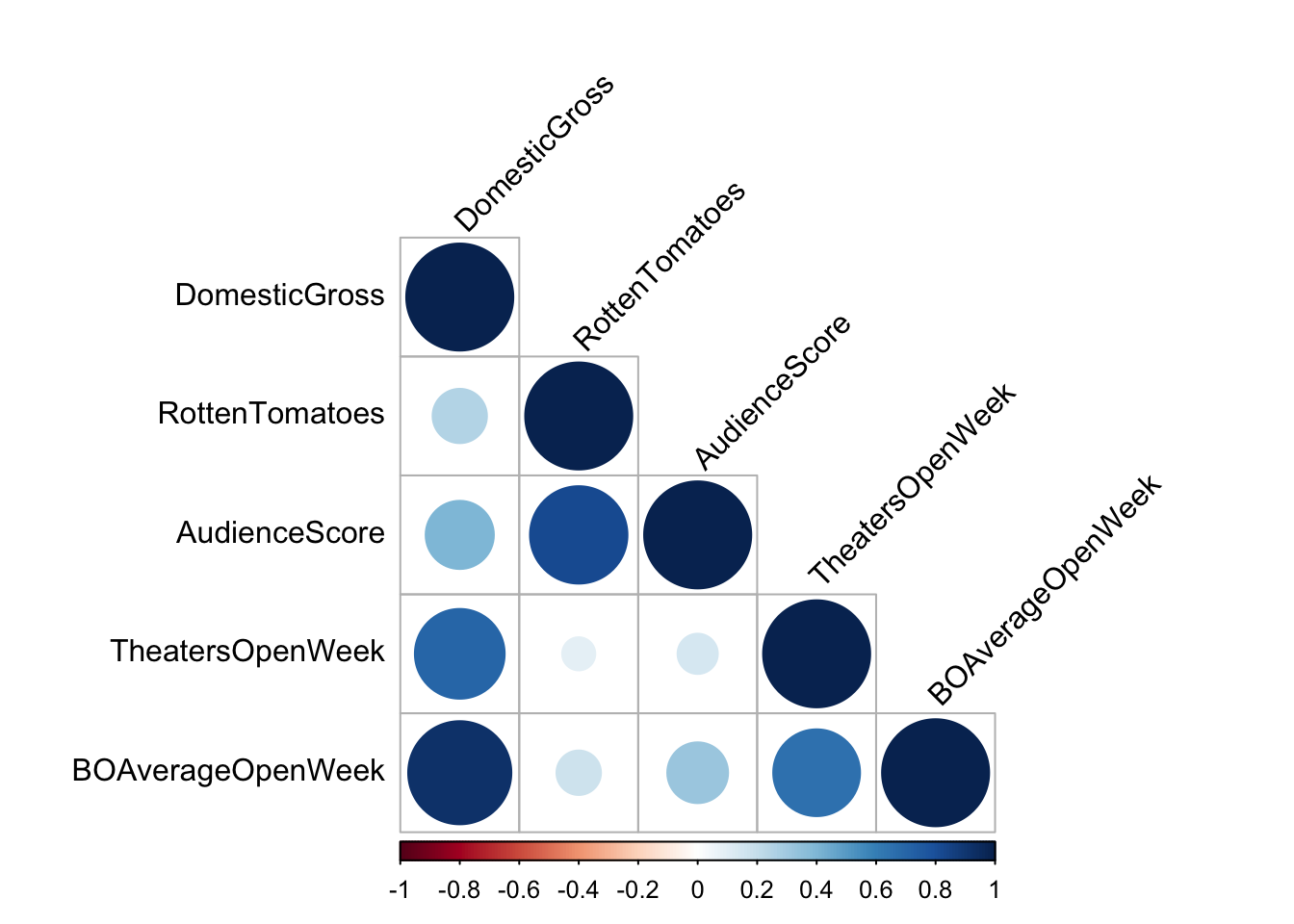

corrplot(cor(movies.numeric), type = "lower", tl.col = "black", tl.srt = 45)

The matrix suggests a strong relationship between the domestic gross and almost all the other scores provided, especially the number of theaters showing the film in its opening week. We can examine this in more detail (and include the profitability categorical variable) through looking at the individual scatterplots.

# remove the names variable

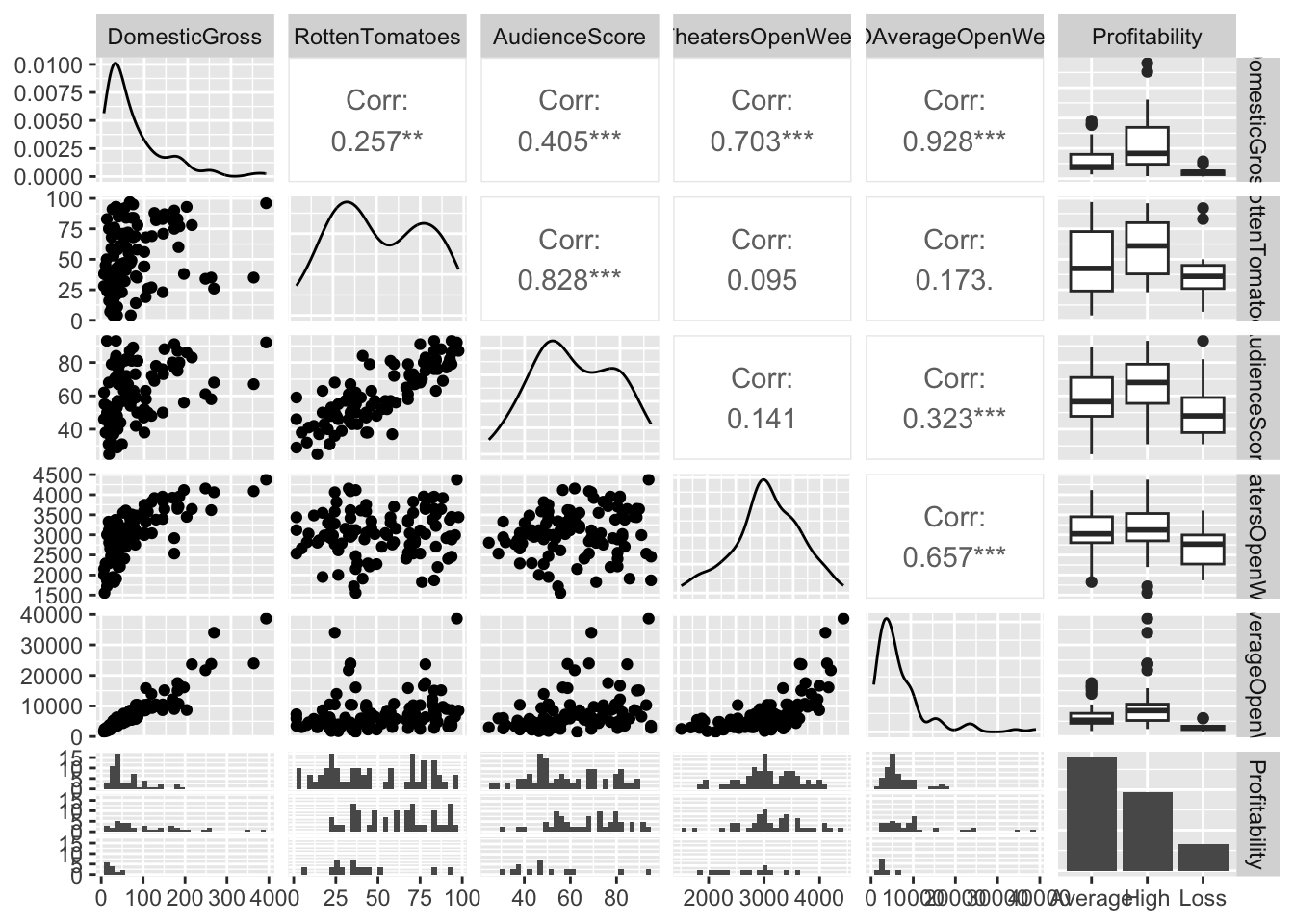

ggpairs(movies[,-1])

Here we can see that, for most predictors, there is a somewhat complex relationship with domestic gross profits. It seems that profitability also might play a role.

Single Predictor models

As requested, we shall now compute and compare two single predictor, simple linear regression models. Also as requested, I have chosen two predictor variables I consider to be ‘interesting’ from my summary analysis above:

The number of theaters showing the film in its opening week (

TheatersOpenWeek). I chose this because it has the highest correlation out of all the predictor variables.Average box office revenue per theater opening weekend, in dollars (

BOAverageOpenWeek). I chose this because of a reasonably strong positive correlation, and from looking at the ggpairs matrix, the potential that investigating some outliers might increase the predictability further.

Model 1a: TheatersOpenWeek

# Scatterplot

myplot <- ggplot(movies, aes(x=TheatersOpenWeek, y=DomesticGross)) +

geom_point() +

xlab("Number of theaters") + ylab("Domestic Gross Profit")+

geom_smooth(method=lm , color="blue", se=TRUE) +

theme_ipsum()

ggplotly(myplot)

LM_NumTheatres <- lm(DomesticGross ~ TheatersOpenWeek, data = movies)In this case, as shown in the scatterplot above and the summary below, our model suggests that for this sample:

\[ \widehat{DomesticGross} = -189.48250 + 0.08705 (NumberTheatres) \]

LINE assumptions check

# Model Diagnostics

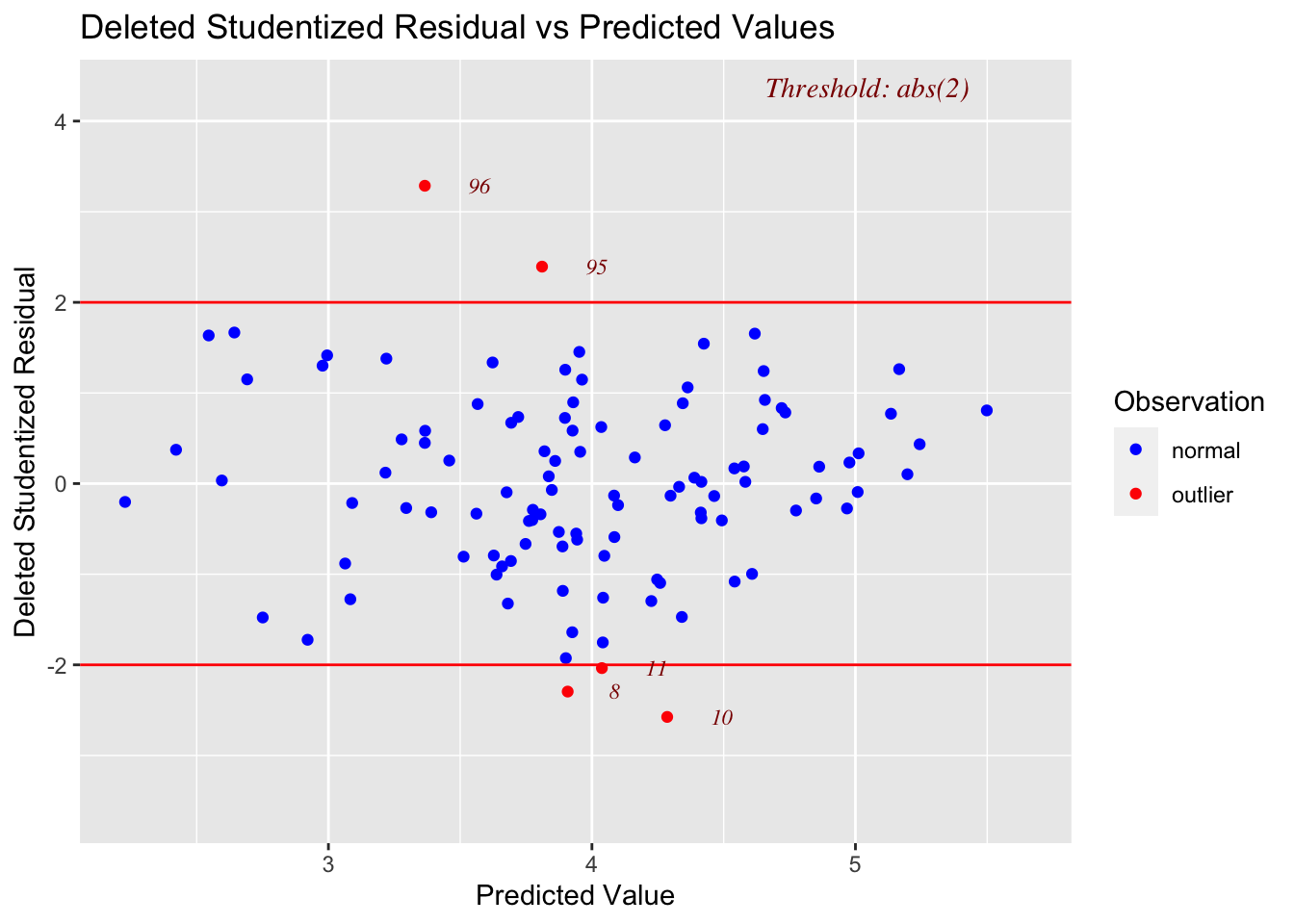

ols_plot_resid_stud_fit(LM_NumTheatres)

- Linearity: FAIL

- Equal variance/normality: probably fail

As shown in the residual plot, this model has clear issues with non-linearity (e.g. a curve would clearly fit better than a line). Therefore I will apply a transformation to fix the issue before moving on.

Transformations

I used the code below to assess several transformations on both the predictor and the response. I first applied some predictor transformation to account for the non-linearity. It became increasingly clear that there was also non equal variance, so I applied a transformation to the response and tried transformations of both.

# Make some transformations

# This code-chunk has been set to eval=FALSE, but you can see what I did

# I don't want to mess up my movie dataset, so I'm trying this on a temporary dataset

tmpmovie <- movies

#===============================

# MAKE TRANSFORMATION COLUMNS

#===============================

# Log

tmpmovie$Log_TheatersOpenWeek <- log(tmpmovie$TheatersOpenWeek)

tmpmovie$Log_DomesticGross <- log(tmpmovie$DomesticGross)

# Sqrt

tmpmovie$Sqrt_TheatersOpenWeek <- sqrt(tmpmovie$TheatersOpenWeek)

tmpmovie$Sqrt_DomesticGross <- sqrt(tmpmovie$DomesticGross)

# Inverse e.g. 1/x

tmpmovie$Inv_TheatersOpenWeek <- 1/(tmpmovie$TheatersOpenWeek)

tmpmovie$Inv_DomesticGross <- 1/(tmpmovie$DomesticGross)

# Boxcox, a more detailed combination of log and 1/x e.g. see equation below

# I chose the 0.222 from the top of the boxcox plot. (NOT IN EXAM)

boxcox_result <- MASS::boxcox(LM_NumTheatres)

tmpmovie$box_TheatersOpenWeek <- (tmpmovie$TheatersOpenWeek^0.222 - 1) / 0.22

#===============================

# TEST SOME MODELS

# I'm just looking at the residual plots to see which works for fixing Linearity & equal variance. I ran each line using contrl-enter. I'm looking for the simplest possible answer that does the job

# PREDICTOR TRANSFORMATIONS.

#===============================

ols_plot_resid_stud_fit(lm(DomesticGross ~ Log_TheatersOpenWeek, data = tmpmovie))

ols_plot_resid_stud_fit(lm(DomesticGross ~ Sqrt_TheatersOpenWeek,data = tmpmovie))

ols_plot_resid_stud_fit(lm(DomesticGross ~ Inv_TheatersOpenWeek, data = tmpmovie))

ols_plot_resid_stud_fit(lm(DomesticGross ~ box_TheatersOpenWeek, data = tmpmovie))

ols_plot_resid_stud_fit(lm(DomesticGross ~ poly(TheatersOpenWeek,2),data = tmpmovie))

ols_plot_resid_stud_fit(lm(DomesticGross ~ poly(TheatersOpenWeek,3),data = tmpmovie))

# RESPONSE TRANSFORMATIONS.

#===============================

ols_plot_resid_stud_fit(lm(Log_DomesticGross ~ TheatersOpenWeek, data = tmpmovie))

ols_plot_resid_stud_fit(lm(Sqrt_DomesticGross ~ TheatersOpenWeek,data = tmpmovie))

ols_plot_resid_stud_fit(lm(Inv_DomesticGross ~ TheatersOpenWeek, data = tmpmovie))

# BOTH TRANSFORMATIONS.

#===============================

ols_plot_resid_stud_fit(lm(Log_DomesticGross~Log_TheatersOpenWeek, data = tmpmovie))

ols_plot_resid_stud_fit(lm(Sqrt_DomesticGross~Sqrt_TheatersOpenWeek,data = tmpmovie))

ols_plot_resid_stud_fit(lm(Inv_DomesticGross~Inv_TheatersOpenWeek, data = tmpmovie))

rm(tmpmovies)There was a clear winner, the log transformation of the response, so I will move forwards from here. This makes sense given how skewed the resonse variable was.

# Log response transformation

movies$Log_DomesticGross <- log(movies$DomesticGross)

ols_plot_resid_stud_fit(lm(Log_DomesticGross ~ TheatersOpenWeek,data=movies))

Model 1B, Log(Domestic_Gross)

Now, let’s assess our new and more complex model:

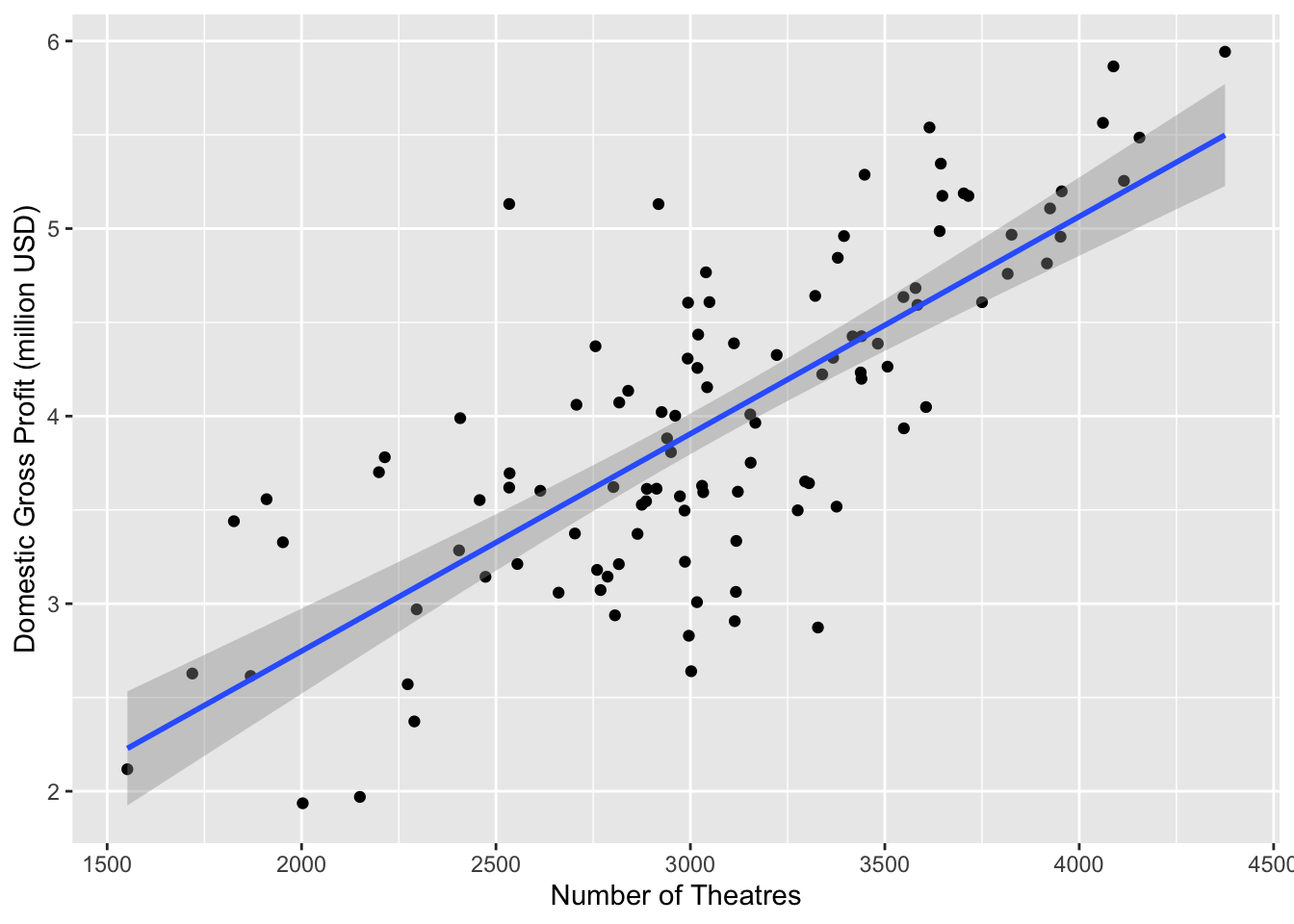

# New scatterplot with theatres open week vs log_domgross

ggplot(movies, aes(x=TheatersOpenWeek, y=Log_DomesticGross)) +

xlab("Number of Theatres") + ylab("Domestic Gross Profit (million USD)")+

geom_point() +

geom_smooth(method="lm")

# linear model

LM_Log_NumTheatres <- lm(Log_DomesticGross ~ TheatersOpenWeek,data=movies)

LM_Log_NumTheatres##

## Call:

## lm(formula = Log_DomesticGross ~ TheatersOpenWeek, data = movies)

##

## Coefficients:

## (Intercept) TheatersOpenWeek

## 0.430433 0.001158In this case, as shown in the scatterplot above and the summary below, our model suggests that for this sample:

\[ \widehat{\ln(DomesticGross)} = 0.430433 + 0.001158(NumberTheatres) \]

LINE assessment

residplot1 <- ols_plot_resid_stud_fit(LM_Log_NumTheatres,print_plot=FALSE)

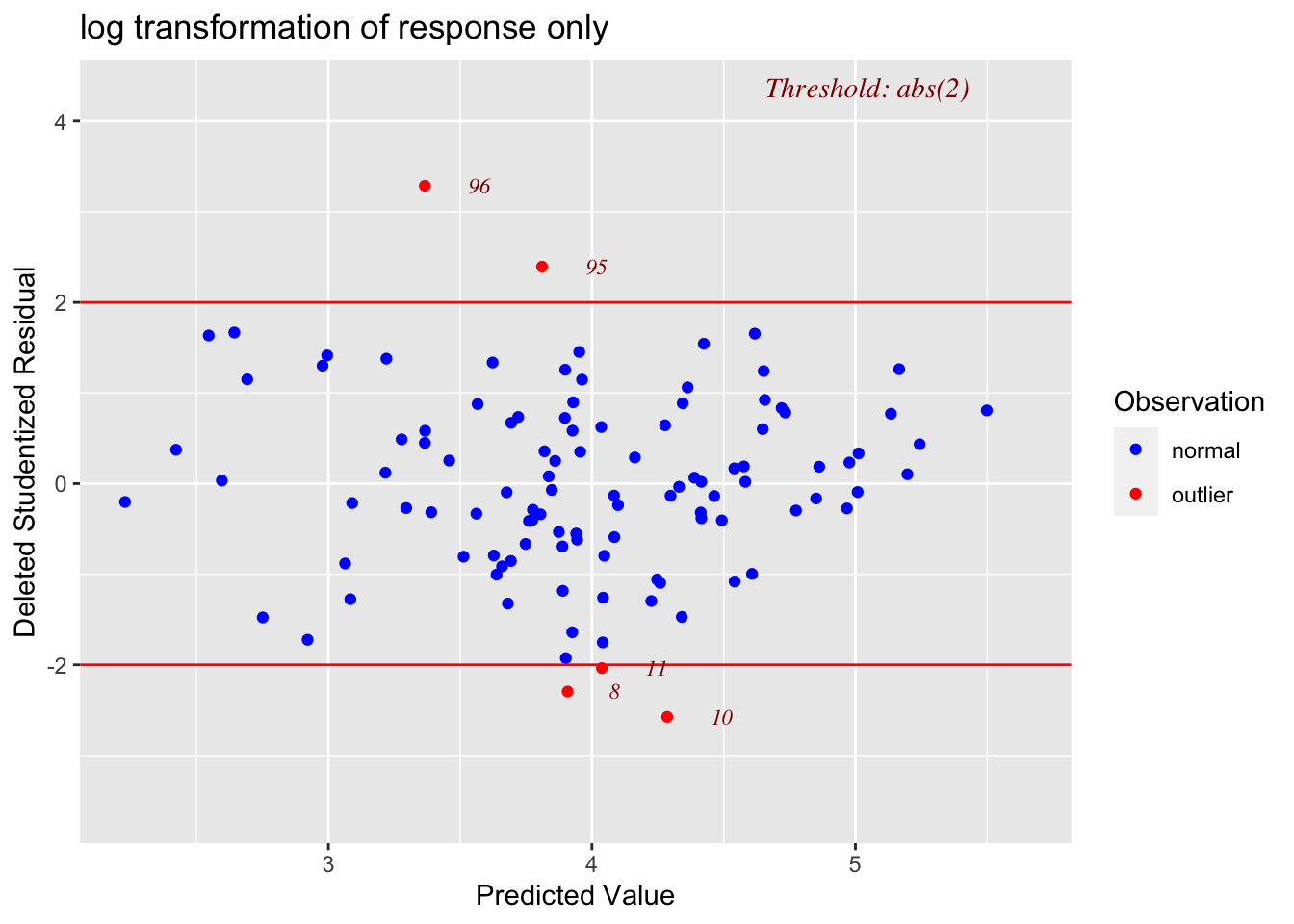

residplot1$plot + ggtitle("log transformation of response only")

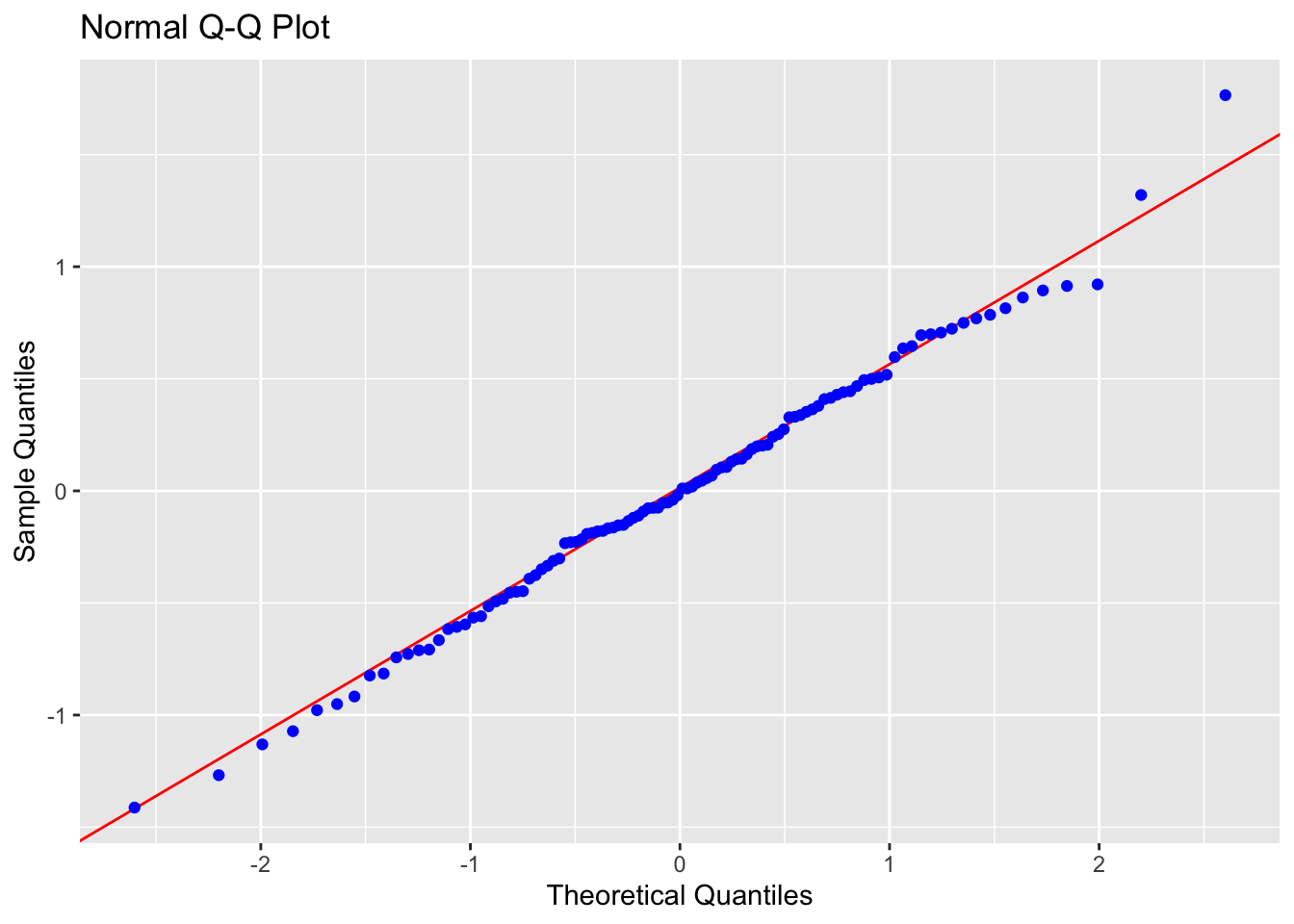

ols_plot_resid_qq(LM_Log_NumTheatres)

# Normality test

ols_test_normality(LM_Log_NumTheatres) ## -----------------------------------------------

## Test Statistic pvalue

## -----------------------------------------------

## Shapiro-Wilk 0.9944 0.9439

## Kolmogorov-Smirnov 0.0524 0.9283

## Cramer-von Mises 11.4842 0.0000

## Anderson-Darling 0.1465 0.9661

## -----------------------------------------------

# F test for variance

ols_test_f(LM_Log_NumTheatres) ##

## F Test for Heteroskedasticity

## -----------------------------

## Ho: Variance is homogenous

## Ha: Variance is not homogenous

##

## Variables: fitted values of Log_DomesticGross

##

## Test Summary

## -------------------------

## Num DF = 1

## Den DF = 106

## F = 2.734183

## Prob > F = 0.1011804-

Linearity: PASS - No evidence a curve would fit the mean of y|x better than a line

-

Independence: PASS - Hard to assess, but no huge issues I can see

-

Normality: PASS - the QQplot looks straight and according to several normality significance tests, there is almost a 95% probability of seeing a result like this if it was sampled from an underlying population which had normal residuals.

- Equal variance: PASS (just) - The residual plot looks relatively random and according there is a 10% chance of seeing a result like this if it was sampled from a population with equal variances in residuals.

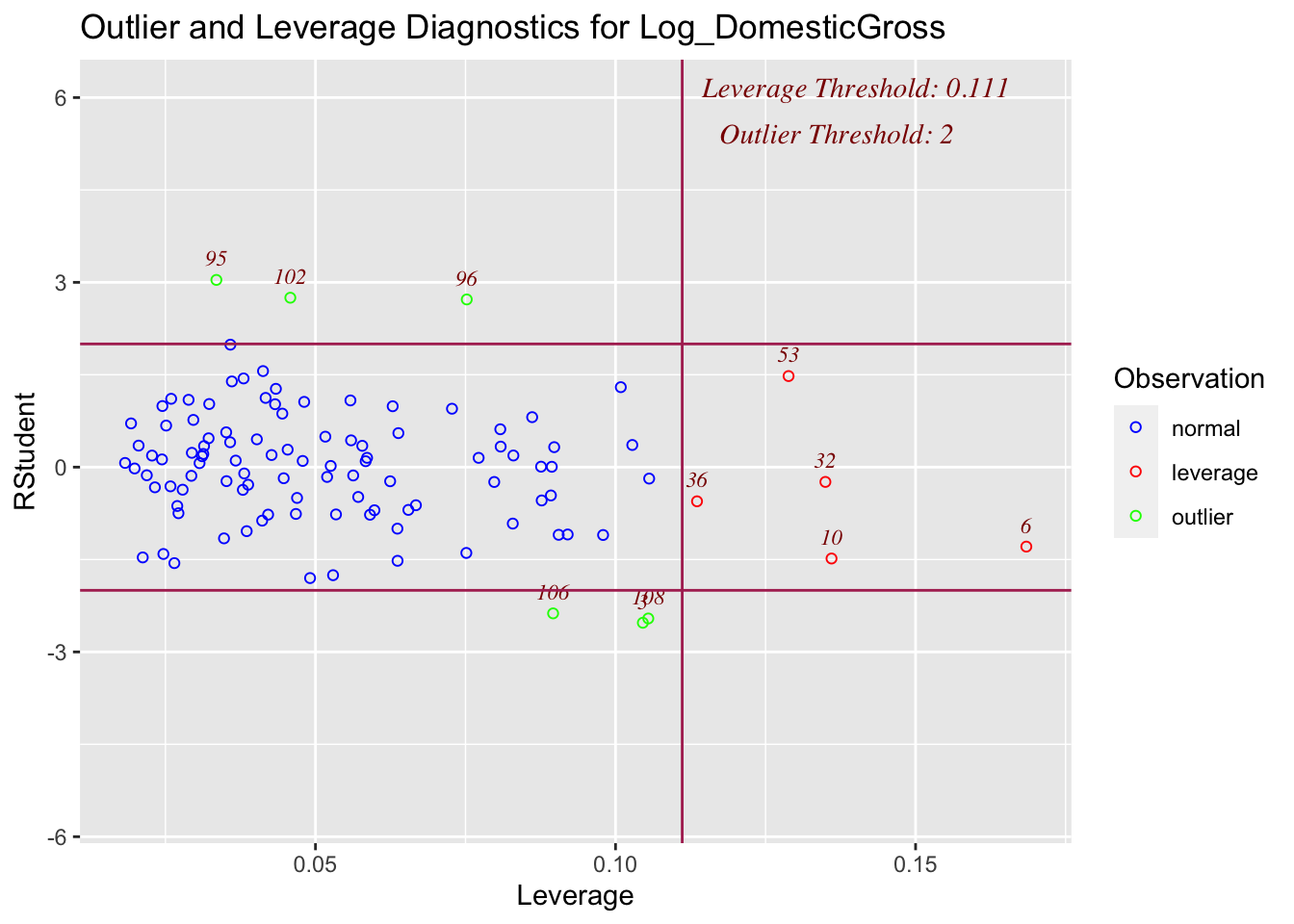

Check for Influential Outliers

Now that model 1B is valid in that it is satisfying the LINE conditions), I will make one final check for influential outliers, as shown below.

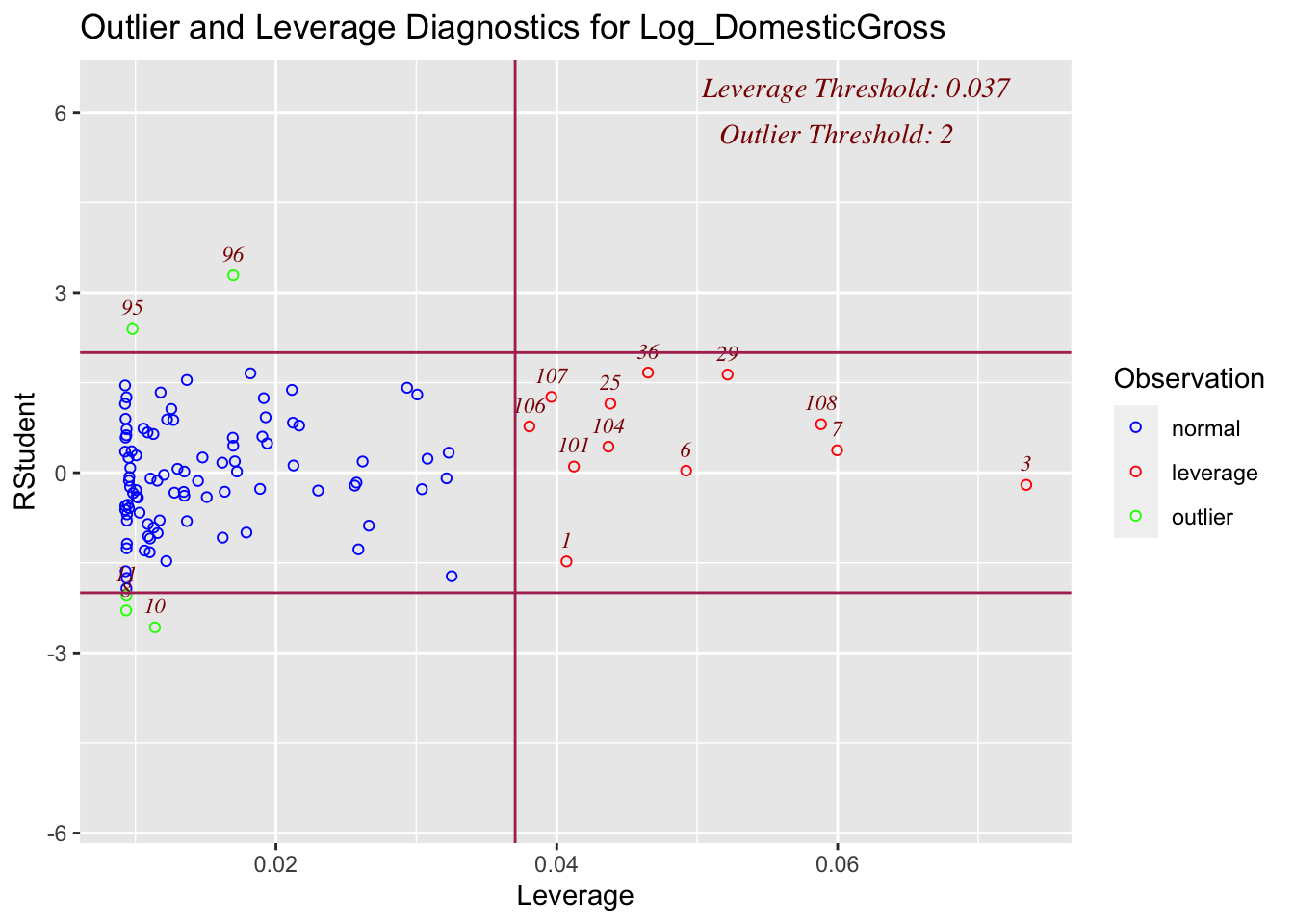

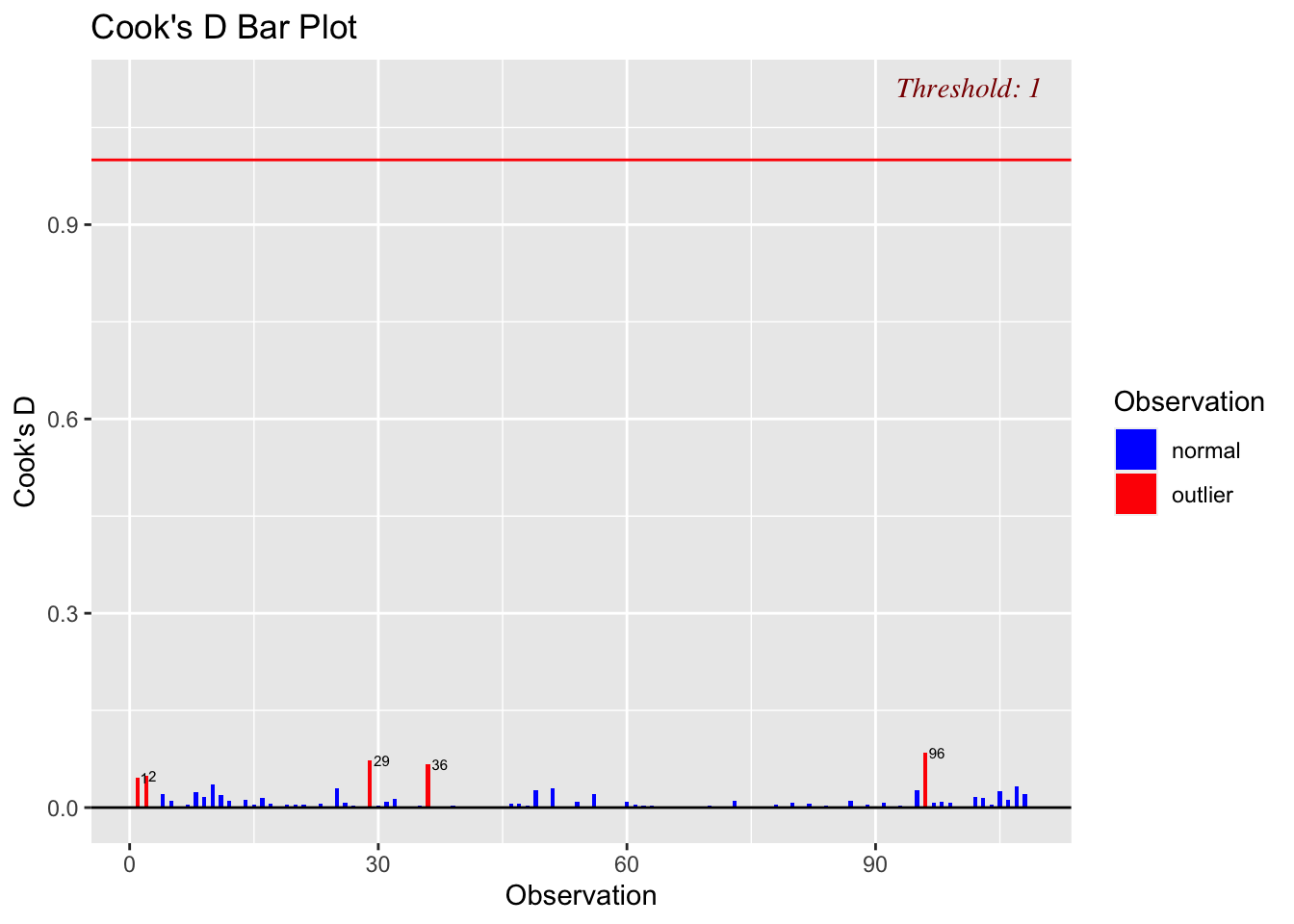

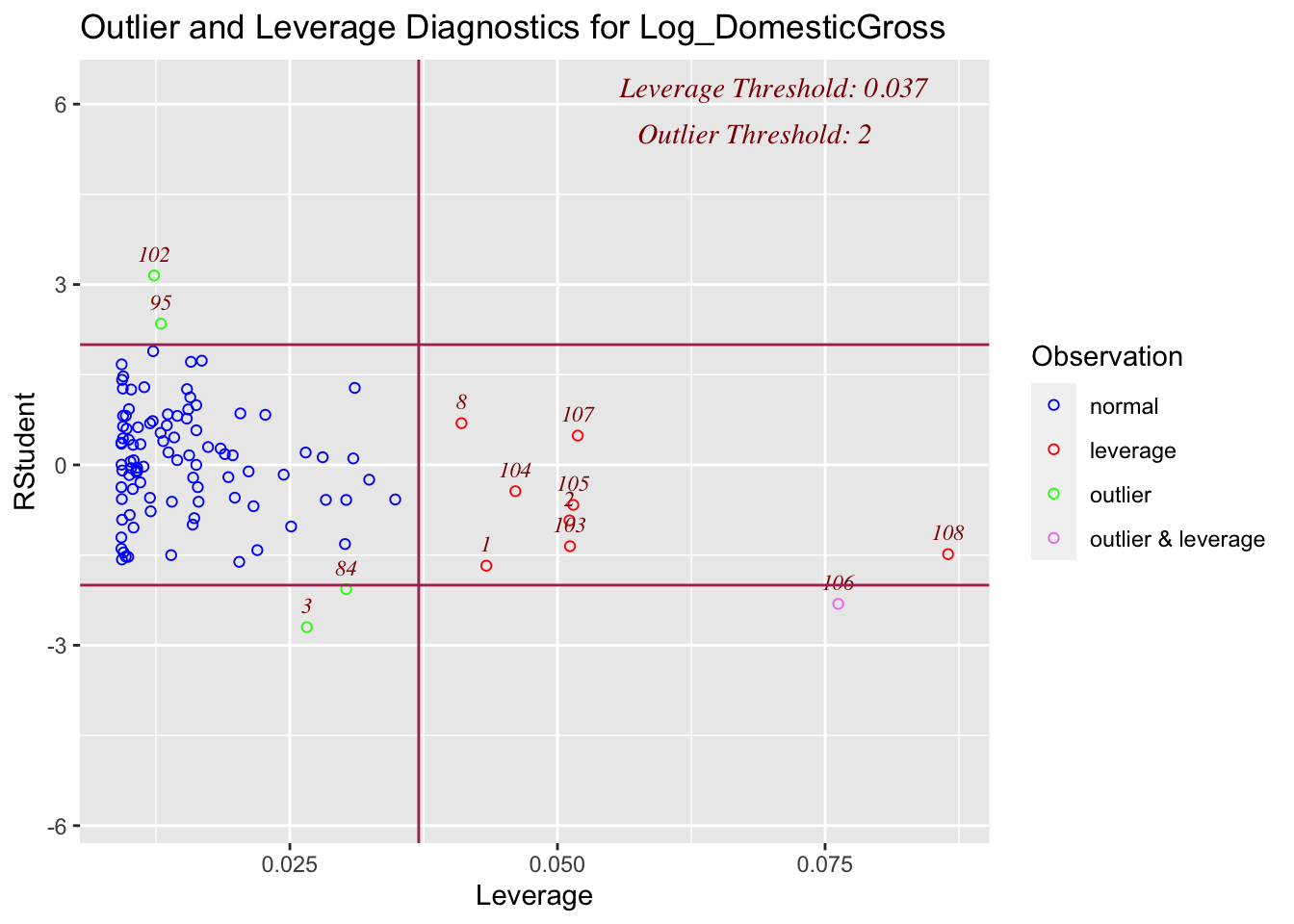

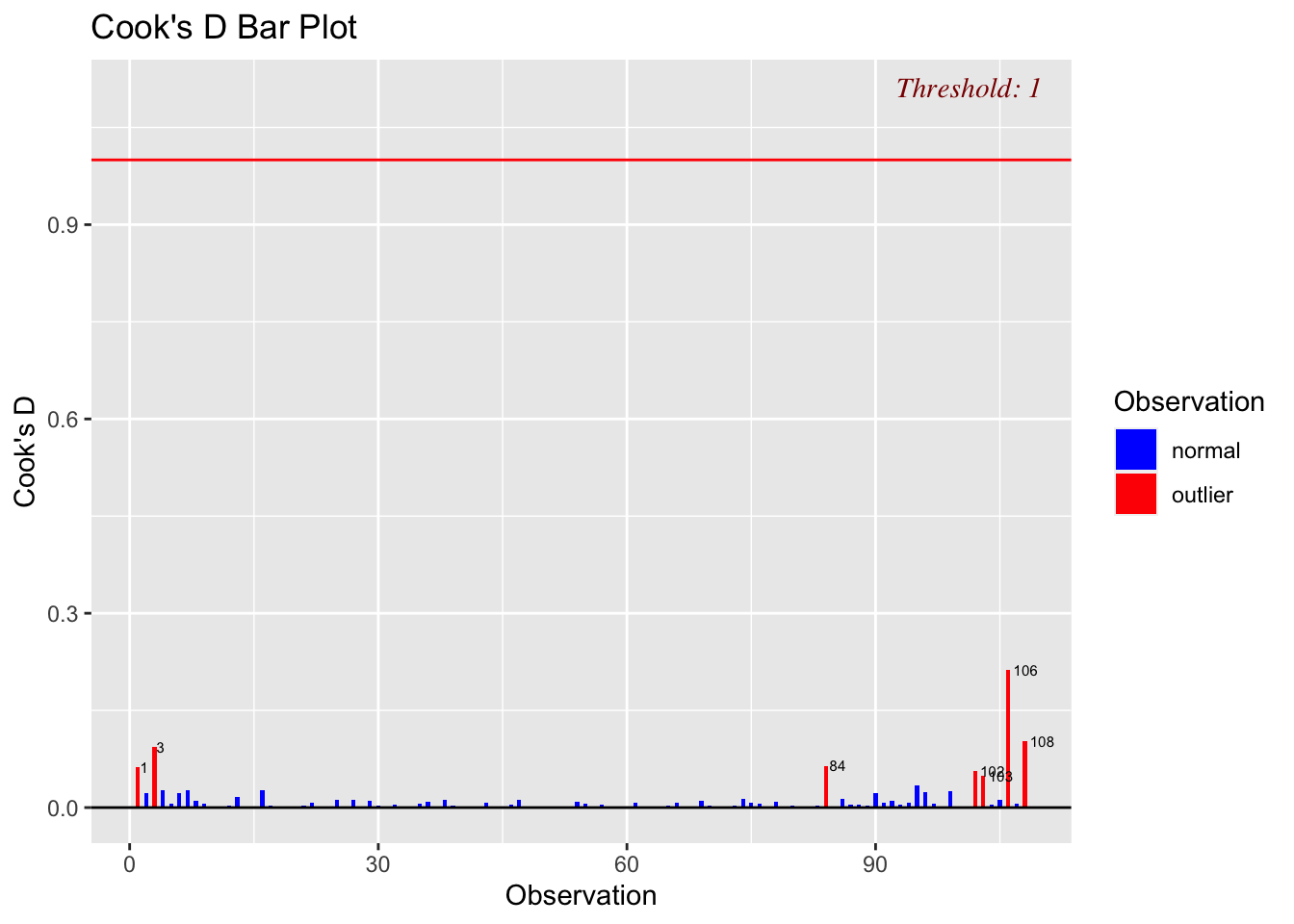

ols_plot_resid_lev(LM_Log_NumTheatres)

ols_plot_cooksd_bar(LM_Log_NumTheatres,threshold=1)

Although there are high leverage points (unusually far from the mean of x in the x direction), and some outliers, none of the outliers are influential, suggesting we can move on to model 2.

Model 2: BOAverageOpenWeek

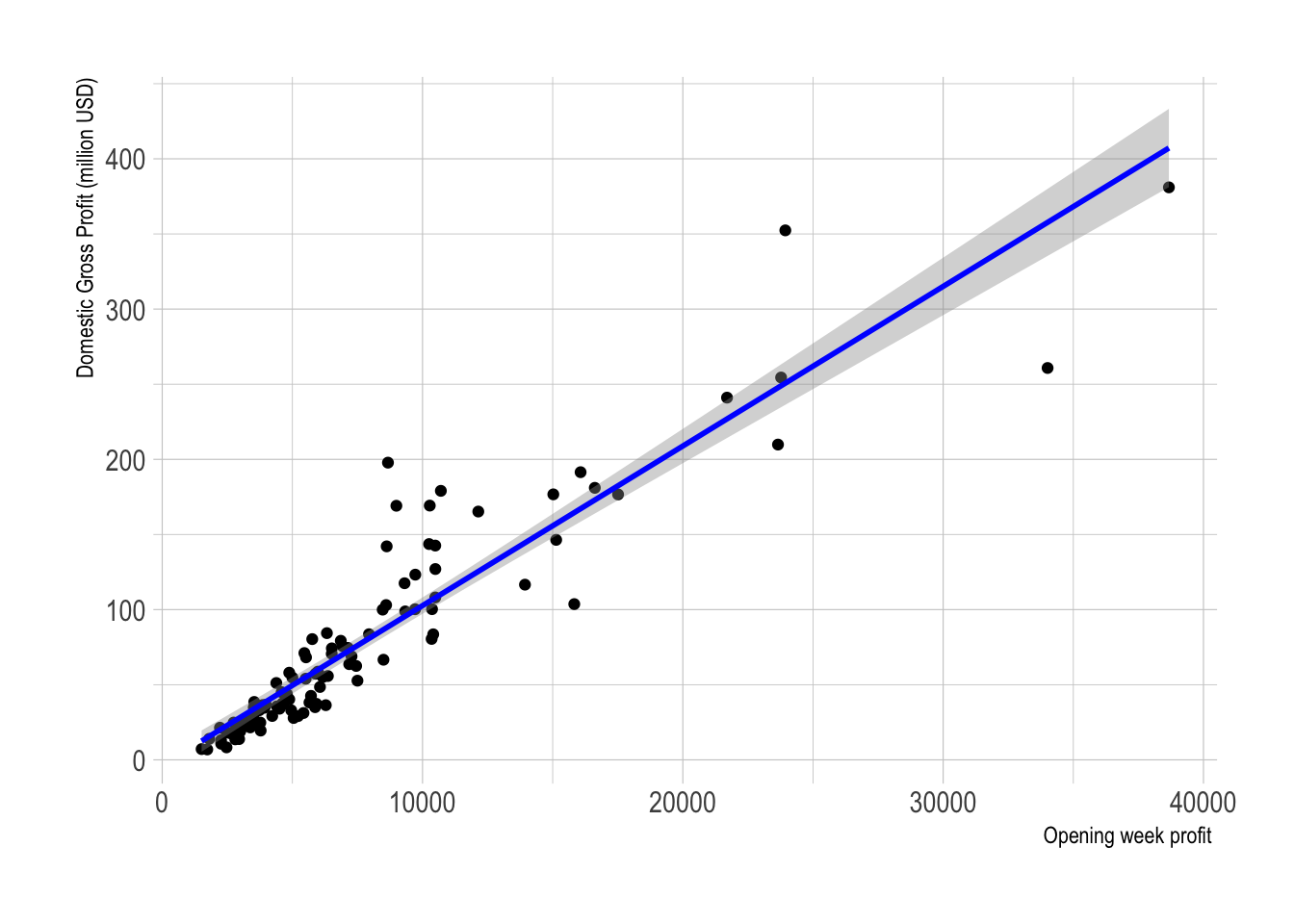

My second model assesses if Domestic Gross (millions of dollars) can be modelled using the Average box office revenue per theater opening weekend (in dollars), variable BOAverageOpenWeek.

# Scatterplot

ggplot(movies, aes(x=BOAverageOpenWeek, y=DomesticGross)) +

geom_point() +

xlab("Opening week profit ") + ylab("Domestic Gross Profit (million USD)")+

geom_smooth(method=lm , color="blue", se=TRUE) +

theme_ipsum()

LM_BOAvOpen <- lm(DomesticGross ~ BOAverageOpenWeek, data = movies)

LM_BOAvOpen##

## Call:

## lm(formula = DomesticGross ~ BOAverageOpenWeek, data = movies)

##

## Coefficients:

## (Intercept) BOAverageOpenWeek

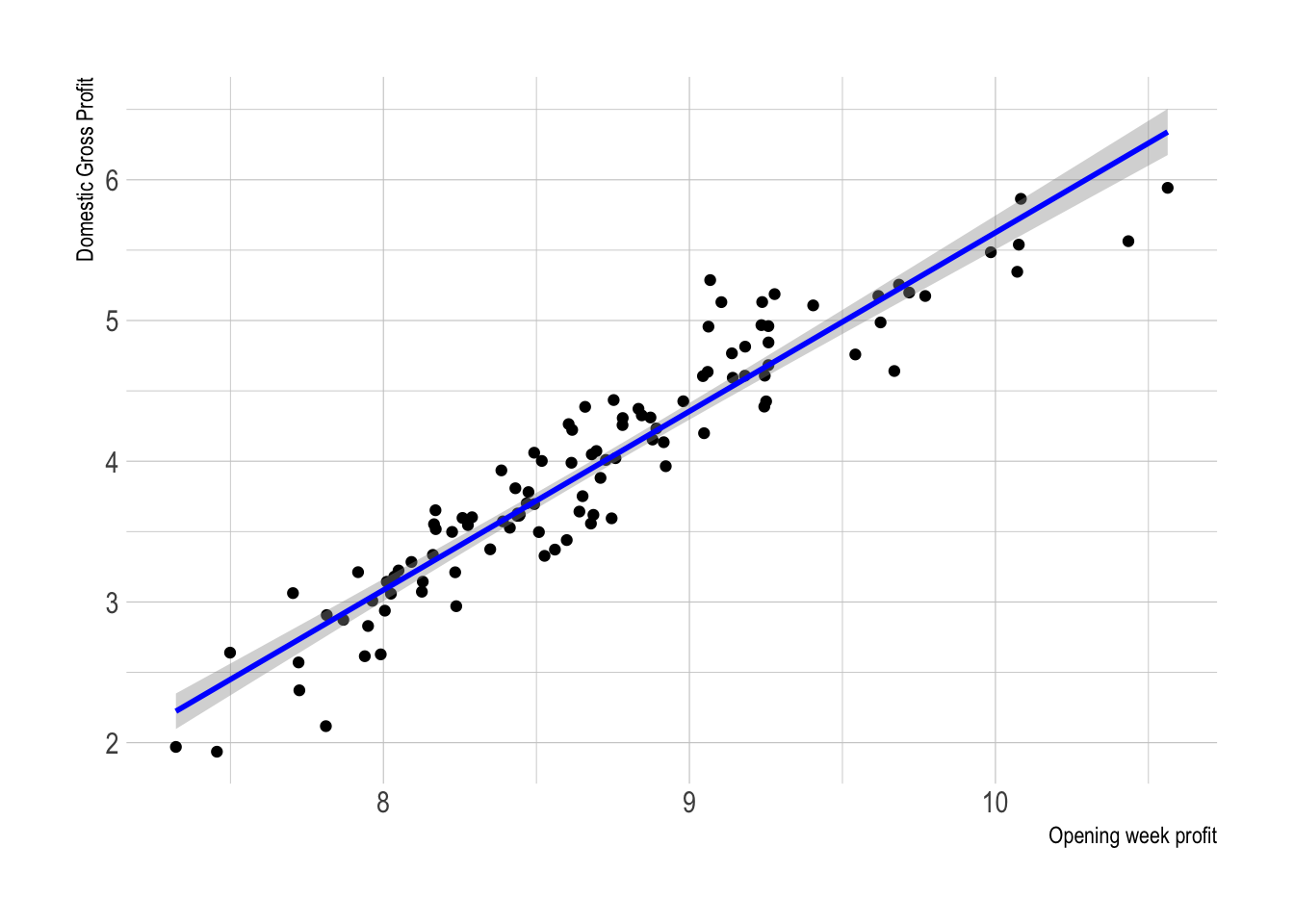

## -3.56700 0.01062In this case, as shown in the scatterplot above, our model suggests that for this sample:

\[ \widehat{DomesticGross} = -3.56700 + 0.01062 (BO_AverageOpeningWeek) \]

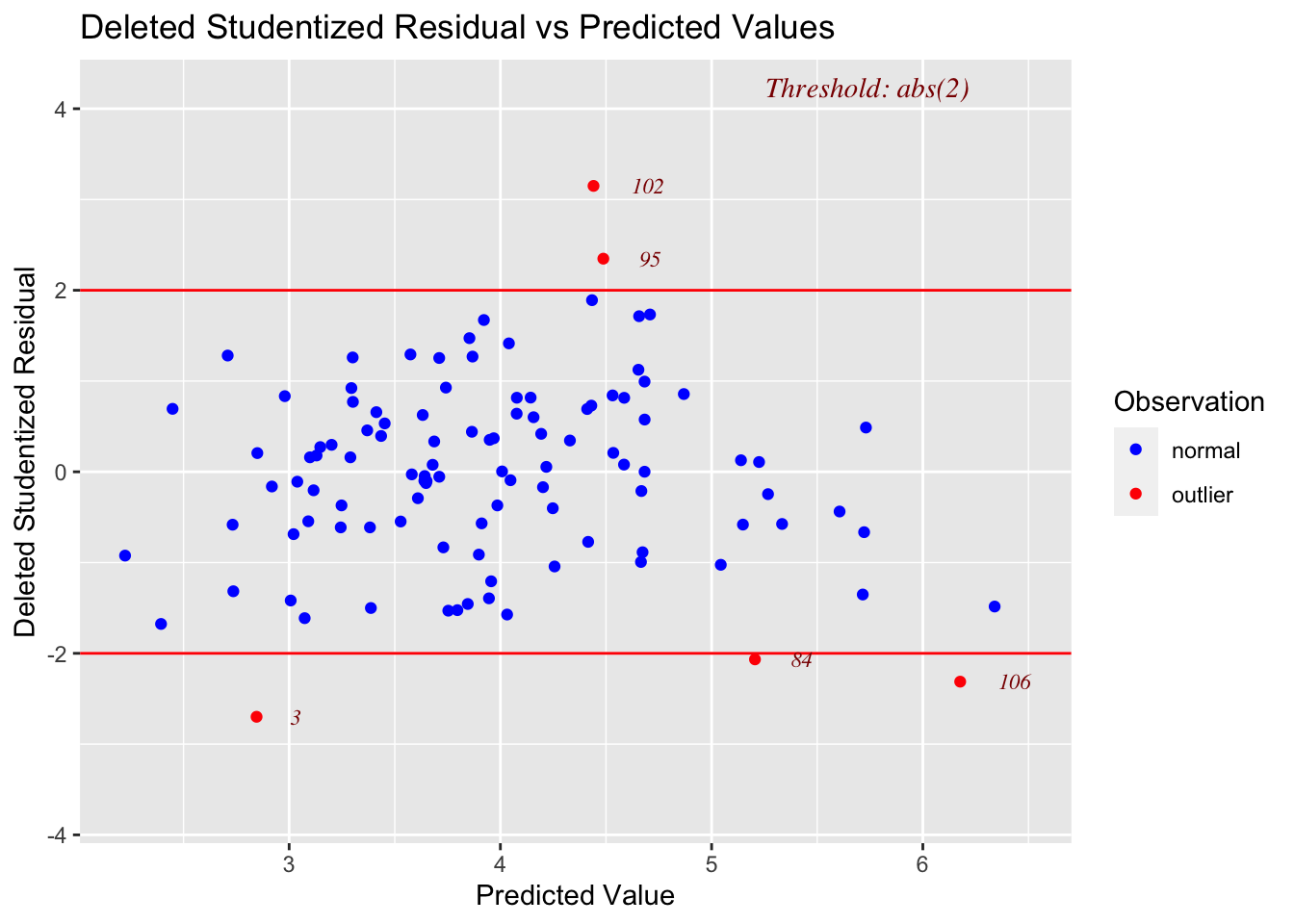

LINE assumptions check

# Model Diagnostics

ols_plot_resid_stud_fit(LM_BOAvOpen)

-

Linearity: PASS - No evidence a curve would fit the mean of y|x better than a line

-

Equal variance: FAIL - The residual plot shows significant “fanning out” (e.g. the points are close to the line for low predicted values and far from the line for higher ones).

This makes physical sense; there is probably a baseline ticket price for cinemas to break even, so for low profits, there’s not much room for variability. But for a very profitable movie or blockbuster, many different types of cinema are showing the film, with different levels of luxury.

Model 2B, Log-Log transformation

Again, we will try a transformation to fix line - after exploring the issue using the code above, it seems that this model needs a log-log transformation (e.g. both the response and predictor are log transformed).

# Log response transformation

movies$Log_DomesticGross <- log(movies$DomesticGross)

movies$Log_BOAverageOpenWeek <- log(movies$BOAverageOpenWeek)

# Scatterplot

ggplot(movies, aes(x=Log_BOAverageOpenWeek, y=Log_DomesticGross)) +

geom_point() +

xlab("Opening week profit") + ylab("Domestic Gross Profit")+

geom_smooth(method=lm , color="blue", se=TRUE) +

theme_ipsum()

LM_BOAvOpen_loglog <- lm(Log_DomesticGross ~ Log_BOAverageOpenWeek, data = movies)

ols_plot_resid_stud_fit(LM_BOAvOpen_loglog)

LM_BOAvOpen_loglog##

## Call:

## lm(formula = Log_DomesticGross ~ Log_BOAverageOpenWeek, data = movies)

##

## Coefficients:

## (Intercept) Log_BOAverageOpenWeek

## -7.077 1.270Our model is now:

\[ \widehat{\ln(DomesticGross)} = -7.077 + 1.270(\ln(BO_AverageOpeningWeek)) \]

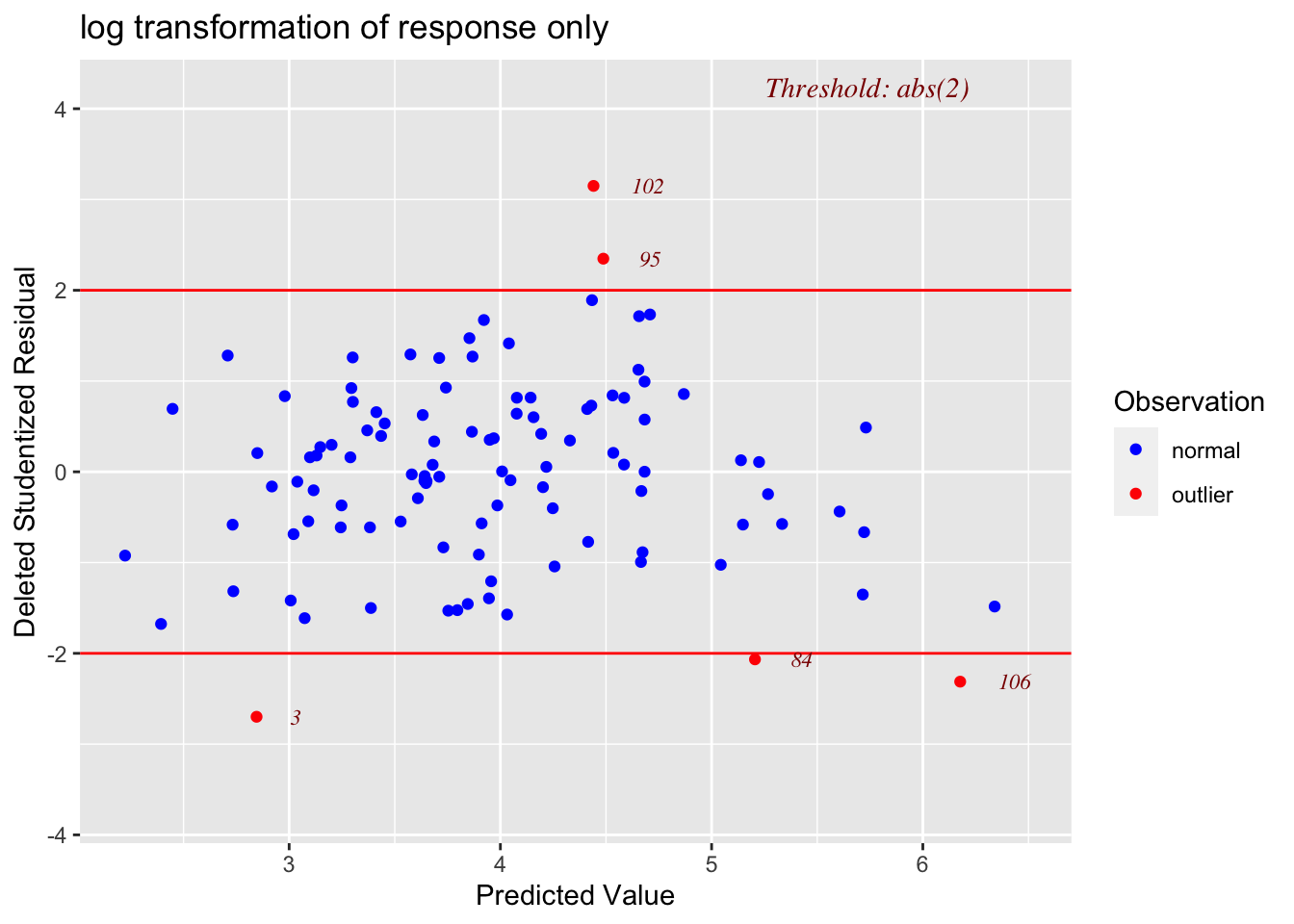

LINE assessment

residplot1 <- ols_plot_resid_stud_fit(LM_BOAvOpen_loglog,print_plot=FALSE)

residplot1$plot + ggtitle("log transformation of response only")

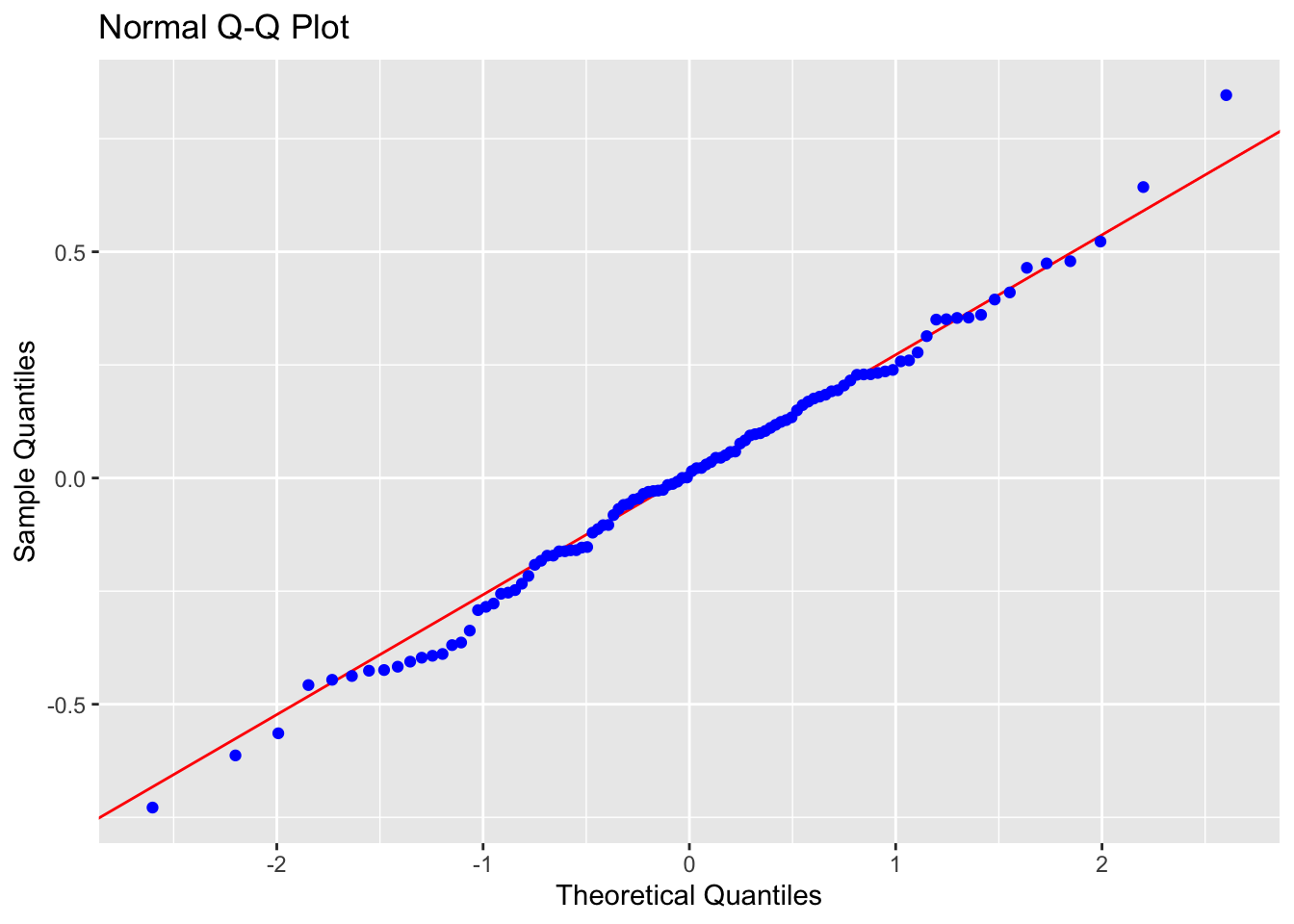

ols_plot_resid_qq(LM_BOAvOpen_loglog)

# Normality test

ols_test_normality(LM_BOAvOpen_loglog) ## -----------------------------------------------

## Test Statistic pvalue

## -----------------------------------------------

## Shapiro-Wilk 0.9941 0.9286

## Kolmogorov-Smirnov 0.0456 0.9781

## Cramer-von Mises 19.907 0.0000

## Anderson-Darling 0.231 0.7992

## -----------------------------------------------

# F test for variance

ols_test_f(LM_BOAvOpen_loglog) ##

## F Test for Heteroskedasticity

## -----------------------------

## Ho: Variance is homogenous

## Ha: Variance is not homogenous

##

## Variables: fitted values of Log_DomesticGross

##

## Test Summary

## -------------------------

## Num DF = 1

## Den DF = 106

## F = 1.222969

## Prob > F = 0.2712824-

Linearity: PASS - No evidence a curve would fit the mean of y|x better than a line

-

Independence: PASS - Hard to assess, but no huge issues I can see

-

Normality: PASS - the QQplot looks straight and according to several normality significance tests, there is over an 80% probability of seeing a result like this if our sample was taken from an underlying population which had normal residuals.

- Equal variance: PASS - The residual plot looks relatively random and according there is a 27% chance of seeing a result like this if it was sampled from a population with equal variances in residuals

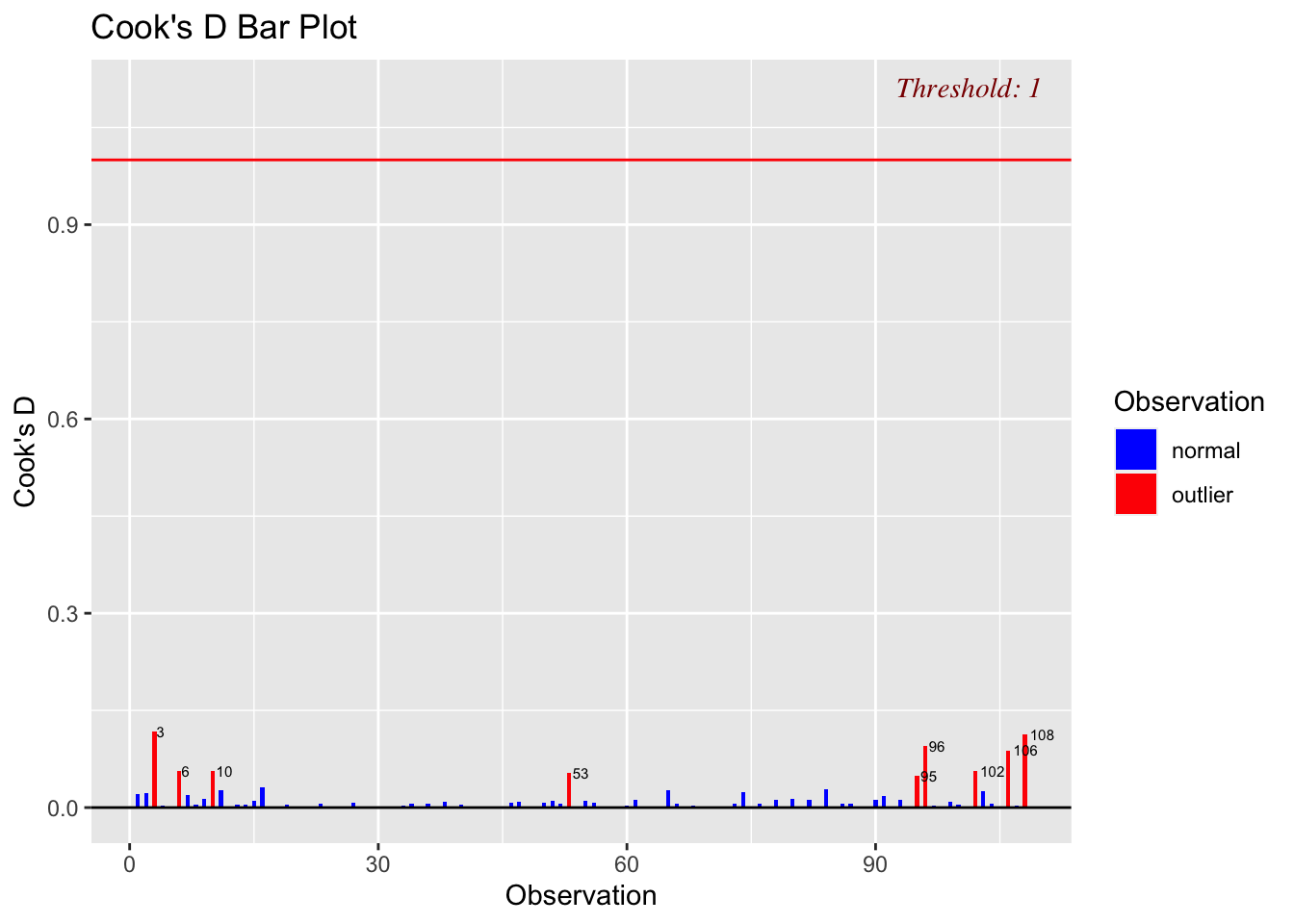

Check for Influential Outliers

Now that model 1B is valid in that it is satisfying the LINE conditions), I will make one final check for influential outliers, as shown below.

ols_plot_resid_lev(LM_BOAvOpen_loglog)

ols_plot_cooksd_bar(LM_BOAvOpen_loglog,threshold=1)

Although there are high leverage points (unusually far from the mean of x in the x direction), and some outliers, none of the outliers are influential, suggesting we can move on to model 2.

Model comparison

We now have two valid models predicting the domestic gross product (LM_Log_NumTheatres and LM_BOAvOpen_loglog) where:

Model 1:

\[ \widehat{\ln(DomesticGross)} = 0.430433 + 0.001158(TheatersOpenWeek) \]

Model 2:

\[ \widehat{\ln(DomesticGross)} = -7.077 + 1.270(\ln(BOAverageOpeningWeek)) \]

Where, DomesticGross is the Gross revenue in the US by the end of 2011, in millions of dollars; TheatersOpenWeek is the number of cinemas showing the movie on opening weekend; and BOAverageOpeningWeek is the average box office revenue per theater opening weekend (in dollars).

The model summaries for each model can be seen as follows:

ols_regress(LM_Log_NumTheatres)## Model Summary

## ---------------------------------------------------------------

## R 0.763 RMSE 0.561

## R-Squared 0.581 MSE 0.321

## Adj. R-Squared 0.577 Coef. Var 14.267

## Pred R-Squared 0.566 AIC 187.629

## MAE 0.443 SBC 195.675

## ---------------------------------------------------------------

## RMSE: Root Mean Square Error

## MSE: Mean Square Error

## MAE: Mean Absolute Error

## AIC: Akaike Information Criteria

## SBC: Schwarz Bayesian Criteria

##

## ANOVA

## ---------------------------------------------------------------------

## Sum of

## Squares DF Mean Square F Sig.

## ---------------------------------------------------------------------

## Regression 47.209 1 47.209 147.234 0.0000

## Residual 33.988 106 0.321

## Total 81.197 107

## ---------------------------------------------------------------------

##

## Parameter Estimates

## -------------------------------------------------------------------------------------------

## model Beta Std. Error Std. Beta t Sig lower upper

## -------------------------------------------------------------------------------------------

## (Intercept) 0.430 0.297 1.451 0.150 -0.158 1.019

## TheatersOpenWeek 0.001 0.000 0.763 12.134 0.000 0.001 0.001

## -------------------------------------------------------------------------------------------and

ols_regress(LM_BOAvOpen_loglog)## Model Summary

## --------------------------------------------------------------

## R 0.947 RMSE 0.279

## R-Squared 0.897 MSE 0.079

## Adj. R-Squared 0.896 Coef. Var 7.093

## Pred R-Squared 0.892 AIC 36.664

## MAE 0.220 SBC 44.710

## --------------------------------------------------------------

## RMSE: Root Mean Square Error

## MSE: Mean Square Error

## MAE: Mean Absolute Error

## AIC: Akaike Information Criteria

## SBC: Schwarz Bayesian Criteria

##

## ANOVA

## ---------------------------------------------------------------------

## Sum of

## Squares DF Mean Square F Sig.

## ---------------------------------------------------------------------

## Regression 72.797 1 72.797 918.681 0.0000

## Residual 8.400 106 0.079

## Total 81.197 107

## ---------------------------------------------------------------------

##

## Parameter Estimates

## ---------------------------------------------------------------------------------------------------

## model Beta Std. Error Std. Beta t Sig lower upper

## ---------------------------------------------------------------------------------------------------

## (Intercept) -7.077 0.365 -19.366 0.000 -7.801 -6.352

## Log_BOAverageOpenWeek 1.270 0.042 0.947 30.310 0.000 1.187 1.353

## ---------------------------------------------------------------------------------------------------The studio asked us to compare the models against several metrics:

-

\(R^2\) - How much variation is explained by the model:

- Model LM_Log_NumTheatres: \(R^2\) = 0.581.

- Model LM_BOAvOpen_loglog: \(R^2\) = 0.897

- So the second model performs better in terms of R2; AKA the average box office revenue per theater opening weekend (in dollars) is able to explain nearly 90% of the variation in \(\ln(DomesticGross)\) in our sample

\(MSE\) - The mean squared error - Model LM_Log_NumTheatres: MSE = 0.321 - Model LM_BOAvOpen_loglog: MSE = 0.079 - So the second model performs better in terms of the mean squared error, as it has smaller errors than the first (possible as both models have a response variable in the same units)

-

LINE Assumptions/residuals - Is the model valid

- We spent some time finding transformations that make the model valid in both cases.

- Each model performs equally well using this metric

AIC - Aikikes Information Criterion - This is a modification to the sum of squares error which takes into account the number of predictors (p) and the number of objects (n).

\[ n\ln{SSE}-n\ln{n}+2p \]

Given that in both our cases n=108 and p=1, it would stand to reason that this result would reflect the SSE.

AIC(LM_Log_NumTheatres,LM_BOAvOpen_loglog)## df AIC

## LM_Log_NumTheatres 3 187.62881

## LM_BOAvOpen_loglog 3 36.66406So this metric also suggests that the second model is better, as it has a lower value of AIC.

Multiple regression

Best Subsets model selection

Finally, we move onto the use of multiple predictors, specifically using the stepwise best subsets model selection approach. Given that both of my individual models use \(\ln(DomesticGross)\), I will also use this as my response variable here.

# apply a model to all the variables

fullmodel <- lm(Log_DomesticGross ~ RottenTomatoes+ AudienceScore+TheatersOpenWeek+BOAverageOpenWeek+Profitability, data=movies)

# Now run the stepwise regression

ols_step_best_subset(fullmodel)## Best Subsets Regression

## --------------------------------------------------------------------------------------------

## Model Index Predictors

## --------------------------------------------------------------------------------------------

## 1 BOAverageOpenWeek

## 2 TheatersOpenWeek BOAverageOpenWeek

## 3 TheatersOpenWeek BOAverageOpenWeek Profitability

## 4 AudienceScore TheatersOpenWeek BOAverageOpenWeek Profitability

## 5 RottenTomatoes AudienceScore TheatersOpenWeek BOAverageOpenWeek Profitability

## --------------------------------------------------------------------------------------------

##

## Subsets Regression Summary

## -----------------------------------------------------------------------------------------------------------------------------------

## Adj. Pred

## Model R-Square R-Square R-Square C(p) AIC SBIC SBC MSEP FPE HSP APC

## -----------------------------------------------------------------------------------------------------------------------------------

## 1 0.6504 0.6471 0.6078 108.5112 168.1788 -140.7628 176.2252 28.9221 0.2728 0.0026 0.3628

## 2 0.7455 0.7406 0.7121 52.7197 135.9018 -172.5831 146.6303 21.2593 0.2023 0.0019 0.2691

## 3 0.8006 0.7928 0.7669 23.2289 113.5568 -195.8307 129.6496 16.8192 0.1630 0.0015 0.2148

## 4 0.8338 0.8256 0.7995 5.0407 95.8839 -211.9276 114.6588 14.1558 0.1384 0.0013 0.1824

## 5 0.8338 0.8240 0.7948 7.0000 97.8403 -209.8283 119.2974 14.2901 0.1410 0.0013 0.1857

## -----------------------------------------------------------------------------------------------------------------------------------

## AIC: Akaike Information Criteria

## SBIC: Sawa's Bayesian Information Criteria

## SBC: Schwarz Bayesian Criteria

## MSEP: Estimated error of prediction, assuming multivariate normality

## FPE: Final Prediction Error

## HSP: Hocking's Sp

## APC: Amemiya Prediction CriteriaI am also going to try one with Log_BOAverageOpenWeek, as that seemed to improve my individual model skill

# apply a model to all the variables

fullmodel.log <- lm(Log_DomesticGross ~ RottenTomatoes+ AudienceScore+TheatersOpenWeek+Log_BOAverageOpenWeek+Profitability, data=movies)

# Now run the stepwise regression

ols_step_best_subset(fullmodel.log)## Best Subsets Regression

## ------------------------------------------------------------------------------------------------

## Model Index Predictors

## ------------------------------------------------------------------------------------------------

## 1 Log_BOAverageOpenWeek

## 2 TheatersOpenWeek Log_BOAverageOpenWeek

## 3 AudienceScore TheatersOpenWeek Log_BOAverageOpenWeek

## 4 AudienceScore TheatersOpenWeek Log_BOAverageOpenWeek Profitability

## 5 RottenTomatoes AudienceScore TheatersOpenWeek Log_BOAverageOpenWeek Profitability

## ------------------------------------------------------------------------------------------------

##

## Subsets Regression Summary

## ------------------------------------------------------------------------------------------------------------------------------

## Adj. Pred

## Model R-Square R-Square R-Square C(p) AIC SBIC SBC MSEP FPE HSP APC

## ------------------------------------------------------------------------------------------------------------------------------

## 1 0.8966 0.8956 0.8918 59.2926 36.6641 -271.4104 44.7105 8.5581 0.0807 8e-04 0.1074

## 2 0.9145 0.9129 0.9086 32.9461 18.0718 -289.6967 28.8004 7.1405 0.0679 6e-04 0.0904

## 3 0.9301 0.9281 0.9232 10.3262 -1.6830 -308.3433 11.7276 5.8944 0.0566 5e-04 0.0753

## 4 0.9357 0.9325 0.9267 5.5001 -6.6882 -314.5467 12.0867 5.4760 0.0536 5e-04 0.0705

## 5 0.9360 0.9322 0.9258 7.0000 -5.2217 -312.8903 16.2354 5.5030 0.0543 5e-04 0.0715

## ------------------------------------------------------------------------------------------------------------------------------

## AIC: Akaike Information Criteria

## SBIC: Sawa's Bayesian Information Criteria

## SBC: Schwarz Bayesian Criteria

## MSEP: Estimated error of prediction, assuming multivariate normality

## FPE: Final Prediction Error

## HSP: Hocking's Sp

## APC: Amemiya Prediction CriteriaOut of all the tables above, it seems that first, by using Log_BOAverageOpenWeek, we can improve the skill, and that also other variables do appear to be important.

Final model

The analysis above suggests that the following model has the highest adjusted \(R^2\) (0.9325) and the lowest AIC (-6.6882).

\[ \widehat{\ln(DomesticGross)} = \beta_0 + \beta_1(AudienceScore)+\beta_2(TheatersOpenWeek)+\beta_3(BOAverageOpenWeek)+\beta_4(Profitability) \]

finalmodel <- lm(Log_DomesticGross ~ AudienceScore+TheatersOpenWeek+Log_BOAverageOpenWeek+Profitability, data=movies)

finalmodel##

## Call:

## lm(formula = Log_DomesticGross ~ AudienceScore + TheatersOpenWeek +

## Log_BOAverageOpenWeek + Profitability, data = movies)

##

## Coefficients:

## (Intercept) AudienceScore TheatersOpenWeek

## -5.5944812 0.0066017 0.0003627

## Log_BOAverageOpenWeek ProfitabilityHigh ProfitabilityLoss

## 0.9244820 0.0910764 -0.1571944Therefore, we see the following relationships.

For movies that made a loss:

\[ \widehat{\ln(DomesticGross)} = -5.594 + 0.0066(AudienceScore)+0.00036(TheatersOpenWeek)+0.9244820(\ln(BOAverageOpenWeek))-0.1572 \]

which can be simplified to:

\[ \widehat{\ln(DomesticGross)} = -5.752 + 0.0066(AudienceScore)+0.00036(TheatersOpenWeek)+0.9244820(\ln(BOAverageOpenWeek)) \]

For movies with an average level of profit:

\[ \widehat{\ln(DomesticGross)} = -5.594 + 0.0066(AudienceScore)+0.00036(TheatersOpenWeek)+0.9244820(\ln(BOAverageOpenWeek))-0.1572 \]

and for movies that made a high level of profit

\[ \widehat{\ln(DomesticGross)} = -5.594 + 0.0066(AudienceScore)+0.00036(TheatersOpenWeek)+0.9244820(\ln(BOAverageOpenWeek))+0.091 \]

which can be simplified to

\[ \widehat{\ln(DomesticGross)} = -5.5034 + 0.0066(AudienceScore)+0.00036(TheatersOpenWeek)+0.9244820(\ln(BOAverageOpenWeek))+0.091 \]

LINE assessment

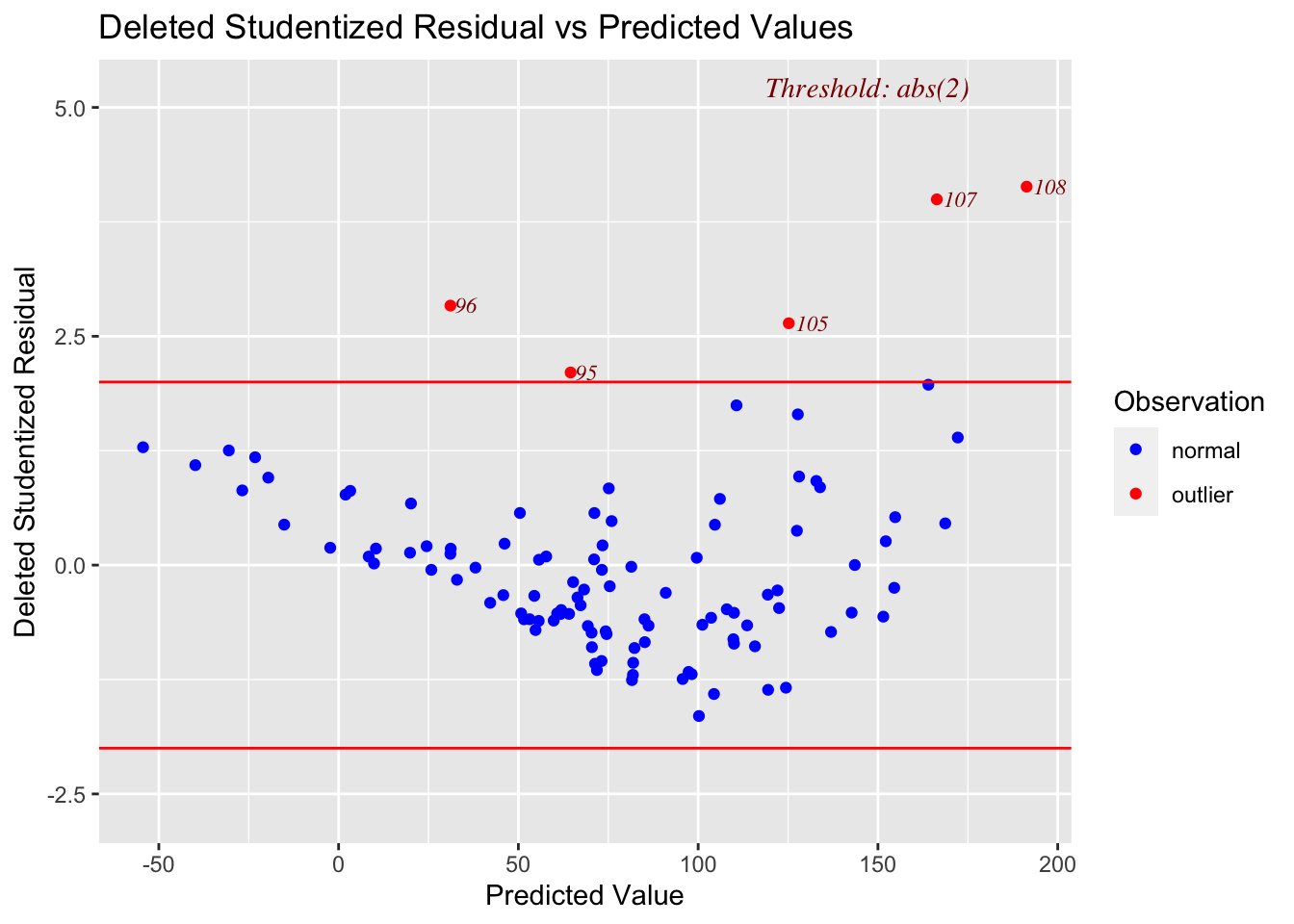

residplot1 <- ols_plot_resid_stud_fit(finalmodel)

ols_plot_resid_qq(finalmodel)

# Normality test

ols_test_normality(finalmodel) ## -----------------------------------------------

## Test Statistic pvalue

## -----------------------------------------------

## Shapiro-Wilk 0.9867 0.3652

## Kolmogorov-Smirnov 0.0555 0.8935

## Cramer-von Mises 22.9808 0.0000

## Anderson-Darling 0.3394 0.4935

## -----------------------------------------------

# F test for variance

ols_test_f(finalmodel) ##

## F Test for Heteroskedasticity

## -----------------------------

## Ho: Variance is homogenous

## Ha: Variance is not homogenous

##

## Variables: fitted values of Log_DomesticGross

##

## Test Summary

## --------------------------

## Num DF = 1

## Den DF = 106

## F = 5.367239

## Prob > F = 0.02244135-

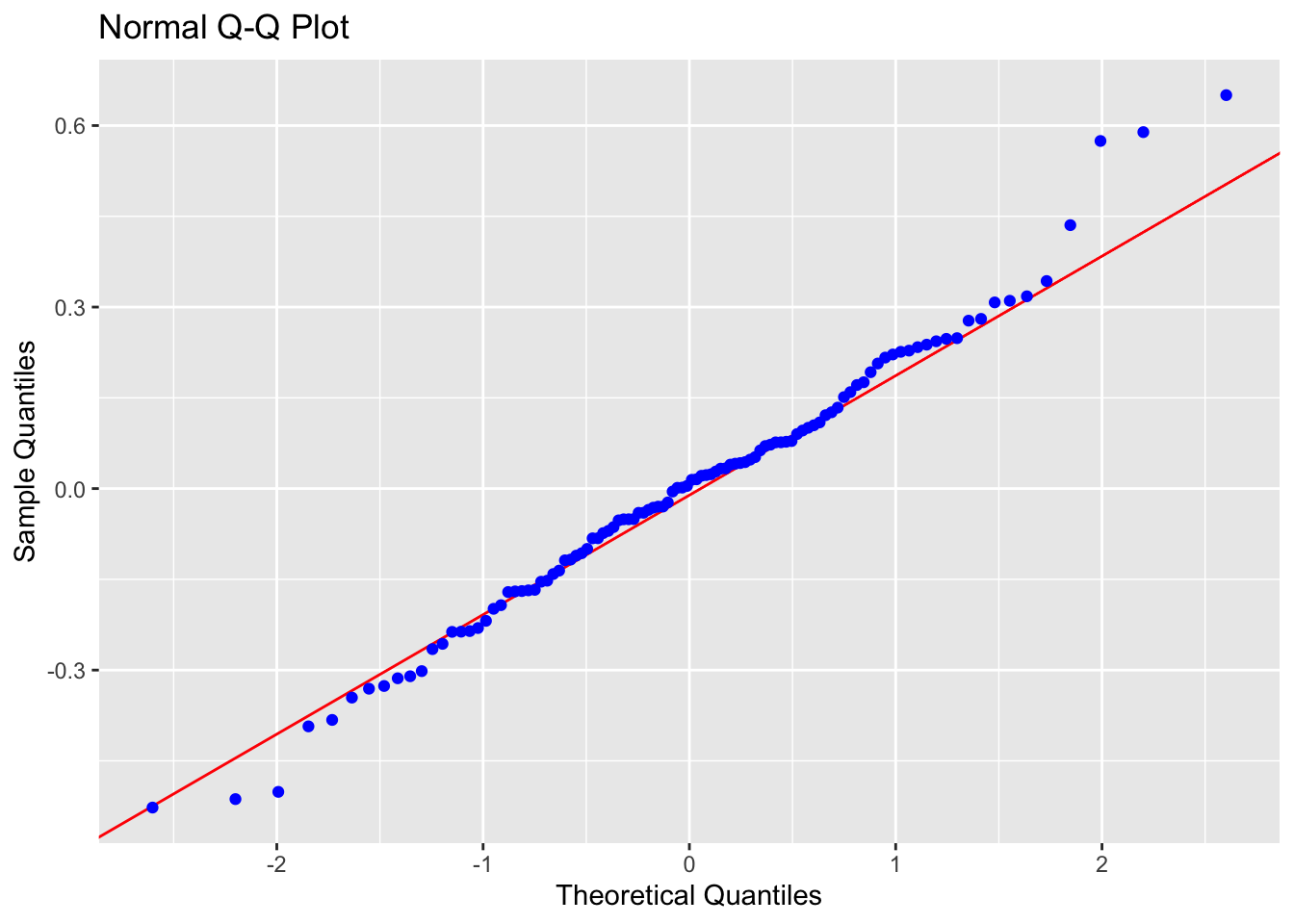

Linearity: PASS - No evidence a curve would fit the mean of y|x better than a line

-

Independence: PASS - Hard to assess, but no huge issues I can see

-

Normality: PASS, The QQ plot does show a few tiny issues around the tails, but the significance tests suggest that this could easily be explained by random chance

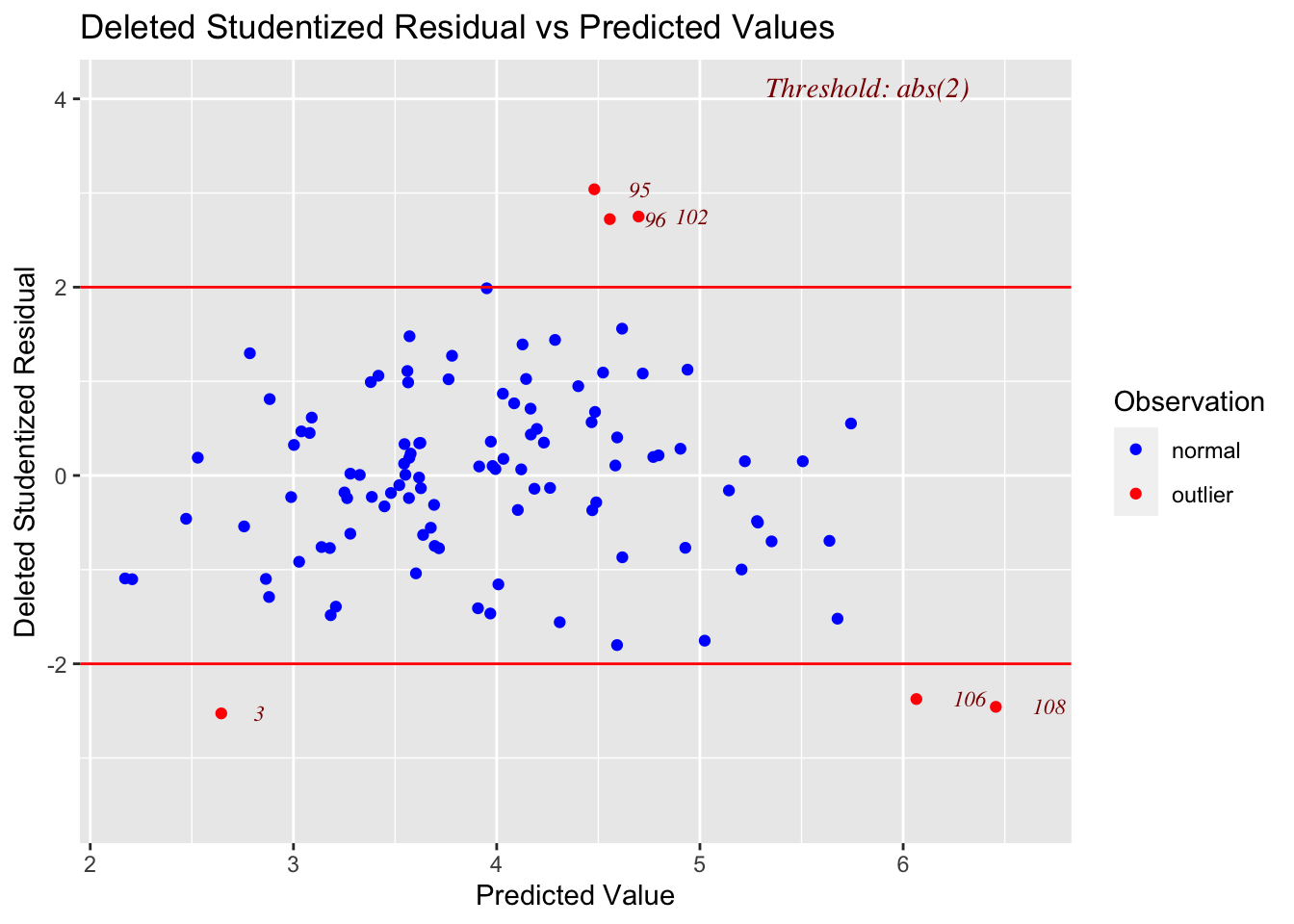

- Equal variance: PASS (just..)- The residual plot looks relatively random, but the F-test does suggest only a 2% chance of seeing a result like this if it was sampled from a population with equal variances in residuals. I wonder if this is due to the two potential outliers (line 108: (Harry Potter and the Deathly Hallows II) and line 106 (The Thing)).

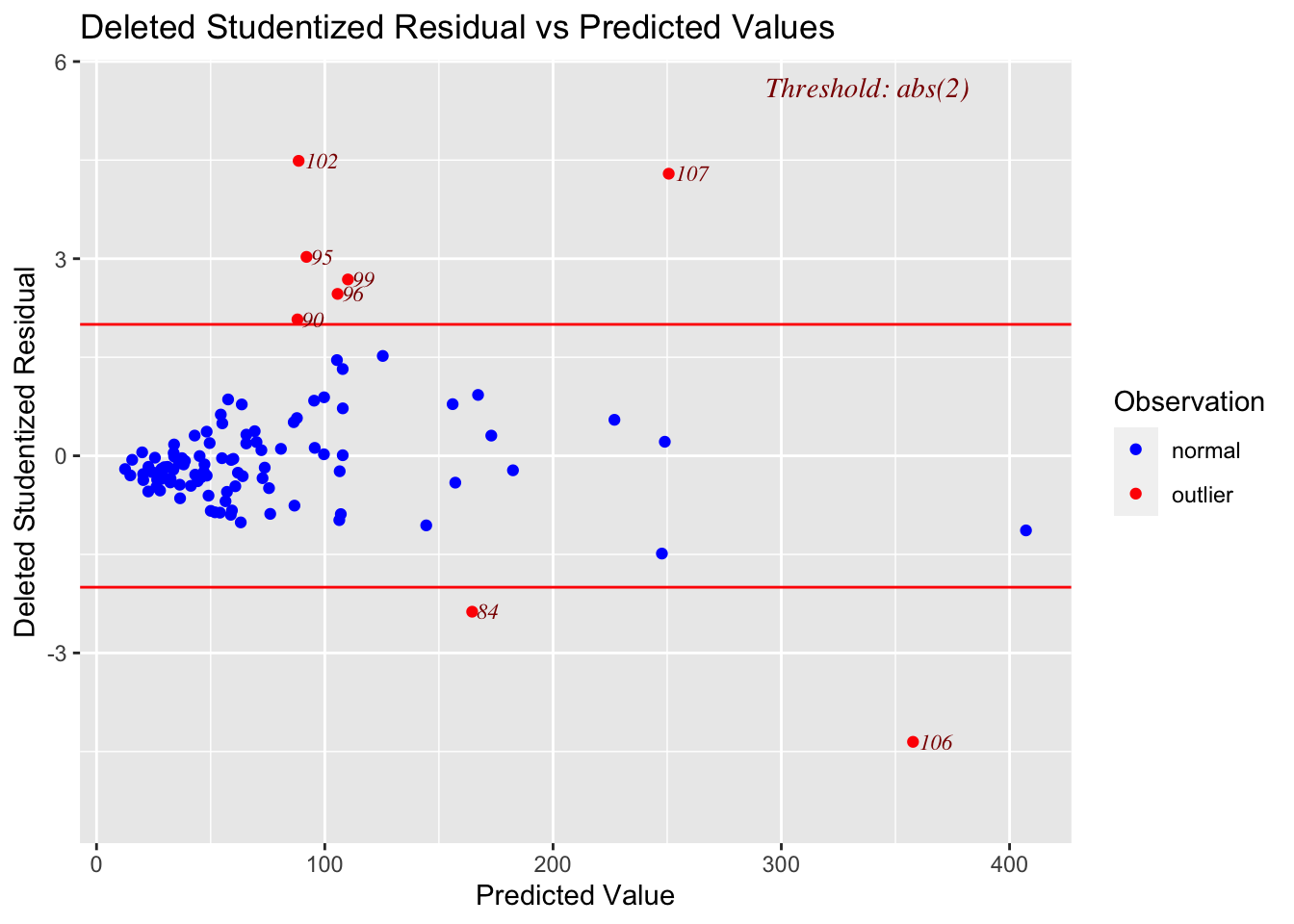

Check for Influential Outliers

Now that model 1B is valid in that it is satisfying the LINE conditions), I will make one final check for influential outliers, as shown below:

ols_plot_resid_lev(finalmodel)

ols_plot_cooksd_bar(finalmodel,threshold=1)

This shows that although there are several outliers and high leverage points, none are influential enough to especially capture the fit.

Studio questions

The studio had several questions about the new model, which I answer below:

1. Show your final model is more skillful than the other models you made

We can do this simply by using AIC, where the smallest value indicates the most skillful model (assuming LINE assumptions). Note, I only included models where the response was ln(DomesticGross), as this technique requires the response variable to be identical across the model comparisons. As you can see below, our final model has the lowest value of AIC.

AIC(finalmodel,fullmodel.log, fullmodel, LM_BOAvOpen_loglog, LM_Log_NumTheatres)## df AIC

## finalmodel 7 -6.688178

## fullmodel.log 8 -5.221659

## fullmodel 8 97.840334

## LM_BOAvOpen_loglog 3 36.664060

## LM_Log_NumTheatres 3 187.6288062. Conduct a partial F test to assess if your final model is significantly better than your best ‘single variable’ model

anova(finalmodel, LM_BOAvOpen_loglog)## Analysis of Variance Table

##

## Model 1: Log_DomesticGross ~ AudienceScore + TheatersOpenWeek + Log_BOAverageOpenWeek +

## Profitability

## Model 2: Log_DomesticGross ~ Log_BOAverageOpenWeek

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 102 5.2210

## 2 106 8.3996 -4 -3.1785 15.524 5.97e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1H0: The Reduced model is all we need (one predictor)

H1: The full model (finalmodel) expains so much more variability that this is appropriate to use

Test statistic: F-test: 15.524

P-value: very small

This suggests that the full model reduces the model error by such a large amount that we think it would be very unlikely to see this by chance. Therefore we have enough evidence to reject H0 and choose the full model.

3. In your final model, which predictors have the highest effect size and significance?

We can answer this by looking at the table of coefficients

ols_regress(finalmodel)## Model Summary

## --------------------------------------------------------------

## R 0.967 RMSE 0.220

## R-Squared 0.936 MSE 0.051

## Adj. R-Squared 0.933 Coef. Var 5.700

## Pred R-Squared 0.927 AIC -6.688

## MAE 0.169 SBC 12.087

## --------------------------------------------------------------

## RMSE: Root Mean Square Error

## MSE: Mean Square Error

## MAE: Mean Absolute Error

## AIC: Akaike Information Criteria

## SBC: Schwarz Bayesian Criteria

##

## ANOVA

## ---------------------------------------------------------------------

## Sum of

## Squares DF Mean Square F Sig.

## ---------------------------------------------------------------------

## Regression 75.976 5 15.195 296.858 0.0000

## Residual 5.221 102 0.051

## Total 81.197 107

## ---------------------------------------------------------------------

##

## Parameter Estimates

## ---------------------------------------------------------------------------------------------------

## model Beta Std. Error Std. Beta t Sig lower upper

## ---------------------------------------------------------------------------------------------------

## (Intercept) -5.594 0.372 -15.039 0.000 -6.332 -4.857

## AudienceScore 0.007 0.001 0.128 4.630 0.000 0.004 0.009

## TheatersOpenWeek 0.000 0.000 0.239 6.524 0.000 0.000 0.000

## Log_BOAverageOpenWeek 0.924 0.057 0.689 16.358 0.000 0.812 1.037

## ProfitabilityHigh 0.091 0.050 0.050 1.808 0.074 -0.009 0.191

## ProfitabilityLoss -0.157 0.075 -0.059 -2.106 0.038 -0.305 -0.009

## ---------------------------------------------------------------------------------------------------The variable with the largest effect size is Log_BOAverage_OpenWeek. If we increase that value by 1, then LogDomesticGross goes up by 0.924. Of course the fact that both are log transformed makes this harder to interpret.

All the variables appear to be significant predictors of gross domestic profit (p < 0.05), apart from “ProfitabilityHigh”. So this suggests that perhaps the profitability should simply be coded as “loss” or “profit” - and the model could be improved further.

4. We have a new film that has been released, what does your model suggest its gross domestic profit will be? What is the 95% uncertainty interval on your estimate

The movie is:

Kung Fu Panda 4

RottenTomatoes: 78

Audience Score: 88

Open week profits: 5400 USD per cinema

Number of theatres: 3300 cinemas showed the film in week 1

Profitability: Average

OK - we can use our model to predict the gross domestic profit:

newdata <- data.frame (Name="Kung Fu Panda 4", RottenTomatoes=78,

AudienceScore=88, TheatersOpenWeek = 3300,

Log_BOAverageOpenWeek=log(5400),

Profitability = "Average")

newdata$Profitability <- as.factor(newdata$Profitability)

Ln_DomesticGross <- predict(finalmodel,newdata=newdata,

interval="prediction",level = 0.95)

# Back transform

DomesticGross <- exp(Ln_DomesticGross)

DomesticGross## fit lwr upr

## 1 62.09127 39.11226 98.57078Our model suggests that the film will make $62,091,270 USD during its year of release. Or to put it in terms of confidence intervals, we are 95% certain that KungFu Panda 4 will make between $39.1 Million and $98.5 Million USD during its year of release.